Greetings from the last days of 2024. I’ve been busy wrapping up my classes and doing a lot of family holiday stuff, and I suspect many of you are similarly occupied. Yet I wanted to post this before January because a *lot* happened in the generative artificial intelligence space in December, and I wanted to get it all out in one go.

The tl;dr: major AI companies released a stack of new products, capabilities, and updates in fierce competition with each other.

Some caveats: this post is focused on US-based companies. Also, the sheer amount of stuff going on means I’m going to do more summary than analysis. I will try to show a few examples of functionality.

I’ll proceed by company.

(Also, this post is not an end-of-year look back at the newsletter. I’m reserving that for the upcoming anniversary of launching this thing. That said, if AI, Academia, and the Future has shown itself to be of value to you, please consider supporting us.)

OpenAI

The company conducted a release frenzy with its “Twelve Days of Shipmas.” This commercial AI Christmas included the launch of a new ChatGPT model, o1, which OpenAI says is more advanced than previous models and has improved math capabilities. OpenAI also gave video generator Sora a public release (here are my test notes).

OpenAI announced another, even more sophisticated ChatGPT model, o3, but so far only as a closed demo and for a restricted group of researchers. o3 can apparently reflect on its steps as it assembles a response, which the company calls a private chain of reasoning. It did very well on the ARC-AGI test run by ARC Prize.

Various incremental additions and improvements followed, from new APIs (“low-latency, multi-modal conversational experiences”) to better model fine-tuning and expanded access to their search tool. Video input is now available. Apple Intelligence received a ChatGPT upgrade. Canvas mode received an upgrade. The Projects feature allows users to organize content and sessions. There is also a very retro turn, with a phone line: 1-800-CHATGPT.

Economically, OpenAI also opened a new subscription service, Pro, for the eye-watering price of $200 per month.

Alphabet (which we still call Google)

Google released a swarm of offerings, with varying degrees of openness and access, for its own Shipmas. The leading item was Gemini, or rather a series of Geminis: Gemini Ultra, Gemini Pro, Gemini Flash, and Gemini Nano. I was able to access some Geminis through this web app. AI Studio is another way to explore Gemini. A variant of Gemini Flash, Flash Thinking Experimental, describes and checks its outputs through a chain of reasoning. There is also a Deep Research version of Gemini.

Accessing some of these Geminis is actually easy, since Google is including them in Gmail, Google Maps, Docs, Slides, and Sheets, along with Drive and Meet, not to mention the Chrome browser.

Meanwhile, Google opened up waitlists for Veo 2 (generates video) and just made Imagen 3 (makes images) available. I tested the latter, asking it to imagine a university after climate change. Here’s one of the first results:

That’s a pretty detailed visual. I’m impressed by the varying depth: foreground scene (bottom left), a far off horizon (top), and a middle ground (center).

Imagen 3 also offered an alternative image:

Here we get four inset images on the left, each offering a different scene. Note the diversity shown by race, age, and gender.

After generating these pictures, the interface then offered a series of fine tuning terms to try. I settled on “intricate” then added one of my favorite keywords “solarpunk,” yielding these two results:

(I’m still waiting for the Veo invite.)

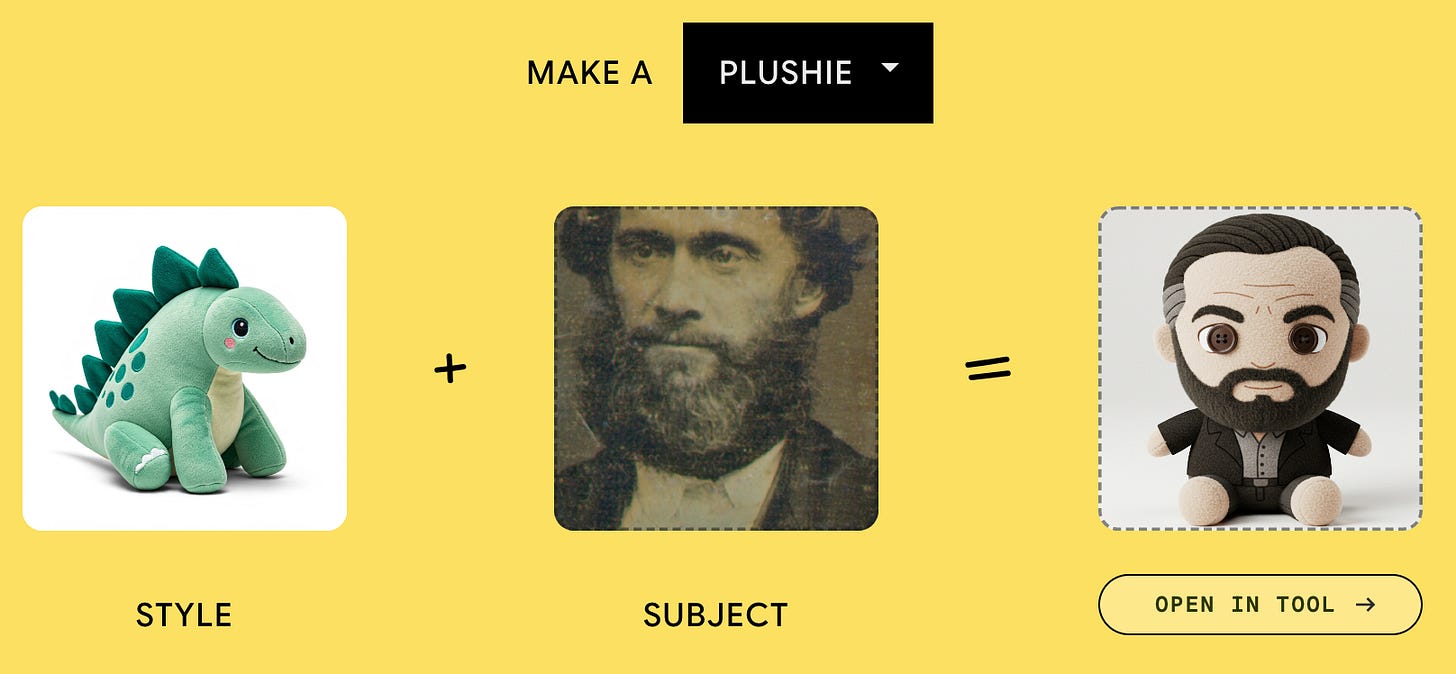

Building on Imagen is Whisk, an image remix service. There are several options in Whisk, including just creating images from scratch, but I tested one of the demo options. There Whisk offered me the chance to make a plush toy. It started with one image (an actual plush toy), then invited me to upload another image. On a whim I used a daguerreotype of an obscure yet fascinating American midwestern politician/religious leader, and Whisk made a toy (image) for him in a few seconds:

Note the “Open in tool” button on bottom left. Clicking this launched a new interface window with more options to edit the results and to generate others.

Then there’s Project Mariner, which aims to be an AI agent. TechCrunch describes it thusly:

After setting up the AI agent with an extension in Chrome, a chat window pops up to the right of your browser. You can instruct the agent to do things like “create a shopping cart from a grocery store based on this list.”

From there, the AI agent navigated to a grocery store’s website — in this case, Safeway — and then searched for and added items to a virtual shopping cart. One thing that’s immediately evident is how slow the agent is: There were about 5 seconds of delay between each cursor movement. At times, the agent stopped its task and reverted back to the chat window, asking for clarification about certain items (how many carrots, etc.).

That’s not available to the public yet, as far as I can tell.

Microsoft

Redmond announced a Vision spinoff of its Copilot AI chatbot. Vision looks like an agent which can work with some websites through verbal dialogue. For example, here’s a demo of someone planning a local trip for a family member:

Crucially, Copilot Vision works by drawing on what is on your desktop and what you do there, which requires a lot of permissions.

MS also released Phi-4, a very small (14 billion parameter) large language model, or small language model (SLM). Microsoft claims steady improvements in reasoning, a reduced footprint, and multimodal inputs and outputs.

Amazon

The “everything store” announced Amazon Nova, a family of foundation models available through Amazon Bedrock, the company’s build-your-own-AI-application service, which in turn runs on Amazon’s cloud services. Through Bedrock users can now access:

Amazon Nova Micro, a text-only model that delivers the lowest latency responses at very low cost.

Amazon Nova Lite, a very low-cost multimodal model that is lightning fast for processing image, video, and text inputs.

Amazon Nova Pro, a highly capable multimodal model with the best combination of accuracy, speed, and cost for a wide range of tasks.

Amazon Nova Premier, the most capable of Amazon’s multimodal models for complex reasoning tasks and for use as the best teacher for distilling custom models (available in the Q1 2025 timeframe).

Amazon Nova Canvas, a state-of-the-art image generation model.

Amazon Nova Reel, a state-of-the-art video generation model.

That last one, Reel, is one they seem to be showing off, as many big AI efforts push hard for video outputs. Here’s one example they offer:

Also along video lines, Amazon claims their Nova Pro model, accessed through Bedrock, can understand and analyze video input.

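One key detail, at least according to Amazon’s announcement, is that Nova “excel[s] at Retrieval Augmented Generation (RAG).” In RAG, the system first retrieves documents relevant to a question, then hands them to the model as context for its answer. This is a major function for improving output quality.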
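For readers who haven’t seen RAG up close, here is a minimal sketch of the idea in Python. It is not Amazon’s implementation and it calls no Bedrock or Nova API. The three documents, the word-overlap scoring, and the function names (`retrieve`, `build_prompt`) are all my own illustrative assumptions. A real system would use a proper search index or embeddings and would send the assembled prompt to a model.

```python
import math
import re
from collections import Counter

# Hypothetical mini "library" standing in for a real document store.
DOCUMENTS = [
    "Amazon Nova Micro is a text-only model with low latency.",
    "Amazon Nova Reel is a video generation model.",
    "Videoseal is a watermarking tool for AI generated video.",
]


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=1):
    """Retrieval step: return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Augmentation step: put the retrieved text in front of the question.

    The resulting string is what would be sent to a language model,
    which then handles the generation step.
    """
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("Which tool adds a watermark to video?", DOCUMENTS))
```

The point of the design is that the model answers from text it has just been handed, rather than only from whatever it absorbed in training, which is why RAG tends to help with accuracy and with citing sources.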

Meta

Meta didn’t offer much this December, except for a watermarking tool for AI generated video content, Videoseal.

What can we derive from this flood of developments?

Obviously generative AI R&D is continuing at a feverish pace.

Multimodal generative AI is increasingly the norm. The apparent goal is for AI to produce text, images, and video, while accepting inputs across those modes. Video is the leading edge of this research and development.

Agents are also emerging as a leading AI development goal. This is in addition to generating content and interacting with users.

Companies are competing to offer chain of reasoning AI. The notional ultimate goal is artificial general intelligence, which I don’t have time to address here, but at present having AI generate a series of individual steps, then react to (including correcting) them, seems to be a major development field.

Nearly every company is using safety language and offering various safety-related services. “Safety” here seems to mean a combination of data security and not producing offensive content.

One language note: “canvas” seems to have become a generic (not trademarked) term for an LLM interface, wherein users can interact with the tool in parallel windows.

In terms of business models, OpenAI and Goo…Alphabet seem most locked in competition. Both are following the old Microsoft model of releasing a range of services. OpenAI’s new price point is quite an attempt to boost subscription revenues.

Don’t miss Meta’s watermarking tool. Readers know I’ve been tracking this idea, especially for its potential for academic integrity (i.e., anti-cheating). Let’s see if anyone uses Videoseal - and if any other AI creator follows suit.

That’s all for now. It was quite a December. Now, on to 2025!

(thanks to Lance Eaton, Ruben Puentedura, and more friends for helping this post come to life)
