On August 7th OpenAI released ChatGPT-5, its update to the world’s most used and talked-about generative AI. I’ve been testing it and collecting responses. Today I’ll share what I’ve found.

I’ll start with an overview of the new bot, then proceed to people’s reactions, OpenAI’s reactions, and conclude with some futures thoughts.

1. OpenAI launches ChatGPT 5.0

After around two years of development and high expectations, ChatGPT-5 appeared (see below for Sam Altman’s unfortunate graphic announcement). The new bot rolled out across devices and platforms quickly, for free and Pro users alike. Microsoft, part owner of OpenAI, then incorporated GPT-5 into many of its AI services.

One key feature of version 5 is an attempt to deal with the plurality of ChatGPT models by having the AI wrangle them all. When you enter a prompt, ChatGPT 5 selects and uses the appropriate ChatGPT instance it thinks you need, using a “router” to connect prompt with bot model. It determines models “based on conversation type, complexity, tool needs, and explicit intent (for example, if you say “think hard about this” in the prompt).” Ideally this would simplify the user experience, so that we don’t have to decide on which model best suits our inquiries. OpenAI refers to this as a “unified system.” Internally, each model now has a new name, according to the system card:

But for the user, it should normally all look like one thing.

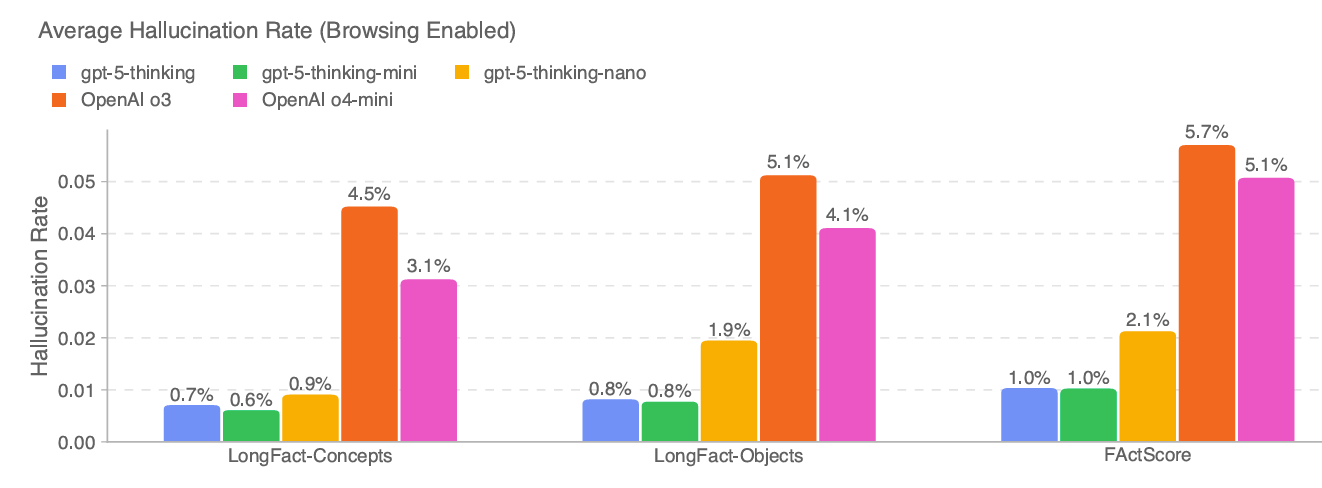

Another major aspect of ChatGPT 5 is that it strives to hallucinate less often than its predecessors did.* Reports on reality of this vary. Internally, OpenAI describes major improvements over prior models, especially when the app has access to a web browser:

Elsewhere, evaluations are mixed, although they tend to agree on seeing some improvement. One analysis has 5 being marginally better than various model 4s:

According to Vectara, GPT-5 has a grounded hallucination rate of 1.4%, compared to 1.8% for GPT-4, and 1.69% for GPT-4 turbo and 4o mini, with 1.49% for GPT-4o… ChatGPT-5 still hallucinates a lot less than its competition, though, with Gemini-2.5-pro coming in at 2.6% and Grok-4 being much higher at 4.8%.

Another version 5 feature related to hallucinations is it being more likely to demur on questions for which it can’t generate a good reply. i.e., ChatGPT-5 will say “I don’t know” or “I can’t help you” more often, according to OpenAI. In their internal tests “gpt-5thinking shows reduced deception compared to OpenAI o3 in 10 out of 11 types of impediment, and it is more likely to acknowledge that it could not solve the task.” Note the word “deception.” OpenAI claims the software’s internal monitor checks chain of thought processes more effectively, in order to tamp down on lying.

ChatGPT-5 is also less likely to be sycophantic, according to the company. The system card reports a 69% drop in sycophantic commentary (75% down for paid accounts). My personal impression, shared by others, is that the bot is a bit more formal. Note that that upsets some users. (More on this below)

Speaking of character, ChatGPT 5 can present as one of four personas: “Cynic: critical and sarcastic; Robot: efficient and blunt; Listener: thoughtful and supportive; Nerd: exploratory and enthusiastic.” Here’s one analyst sharing their experience and another.

Model 5 also received training in refusing users’ more dangerous queries, called “Safe Completions.” In an Engadget interview one engineer described a shift away from simply refusing prompts which looked damaging:

With Safe Completions, GPT-5 will try to give the most helpful answer within the safety constraints OpenAI has imposed on it. In tricky situations… the model will only provide high-level information that can't be used to harm anyone.

Overall, OpenAI argues that model 5 is doing better on safety issues. They broke those down into three types:

Frontier harms: Model capability to generate offensive cyber content such as malware; CBRN uplift for novices and experts; persuasion, autonomy, and deception; jailbreak susceptibility; and chain-of-thought extraction.

Content safety: Model propensity to generate sexual or violent content; content affecting child safety; mis/disinformation amplification; private-information leakage; and targeted harassment.

Psychosocial harms: Anthropomorphism; fostering emotional entanglement/dependency; propensity to provide harmful advice; and response quality during crisis management.

They also studied ChatGPT-5’s abilities to generate biological and chemical weapon information. OpenAI seems especially concerned about the bot’s ability to describe bioweapons.

On a different level, ChatGPT can connect to users’ Gmail, Google Calendar, Contacts, and Google Drive content, agent-like. I’m not sure which accounts get access to which level of Google integration. One report says this is mostly for Pro users.

OpenAI also posted claims about improvements in two major fields. First, the company describes giving better medical advice than previously, scored against OpenAI’s own internal benchmark HealthBench. They do issue the sound caveat of “Please note that these models do not replace a medical professional and are not intended for the diagnosis or treatment of disease.” Interestingly, the bot now sounds more like its teacher mode (cf my recent post) when interacting with users on health care topics: “Compared to previous models, it acts more like an active thought partner, proactively flagging potential concerns and asking questions to give more helpful answers.”

Second, OpenAI claims improved coding capabilities. “Vibe coding” yields better results, according to the company. Some reports concur, although this one gives the crown to Claude. In my limited experience the bot generates basic (not BASIC, although…) code swiftly and works as far as I’ve tested. One resonant phrase caught my ear: “software on demand.”

There are still more features to note. OpenAI claims 5 is faster, which I can confirm from my experience, albeit an n of 1. The firm advertises improvement in voice output mode (here’s one description). The system card says image inputs are a bit better. And we have access to more memory, maybe or maybe not.

2. Reactions from the world

“… came the great disillusionment.”

-HG Wells, War of the Worlds

My sense is that there was an initial outburst of interest, followed in just hours by a storm of complaints, criticisms, and outrage. Here is the image by which Altman introduced ChatGPT-5:

And that’s how many people reacted, seeing the new bot as a disaster, an object of malice, something to dread.

This Reddit AMA with Sam Altman gives a good sense of the range of negative views, some of which we’ve touched on above: requests for being able to go back to version 4; concerns the bot’s personality had degraded; a cut to reasoning access; dislike at the upgrade being mandatory.

Other commentators saw version 5 as just an incremental improvement, not what years of development should have yielded. (For example.). One business site described reactions as mixed. Some more pro-AI sources, like Ethan Mollick, were more pleased by improvements. “[I]t's not just another incremental update,” opines Tom’s Guide.

Longstanding critics saw their views confirmed. Gary Marcus runs down many objections, although admitting 5 has some virtues. Brian Merchant thinks that OpenAI has stopped caring about users, and instead reoriented around the capital investment world. The Register argues that ChatGPT-5 is really designed to cut OpenAI’s costs, as the router can send queries to less GPU-intensive models.

3. OpenAI responds

OpenAI announced many steps to react to model 5’s backlash. In an X/Twitter post CEO Sam Altman promised several actions:

Updates to ChatGPT:

You can now choose between “Auto”, “Fast”, and “Thinking” for GPT-5. Most users will want Auto, but the additional control will be useful for some people.

Rate limits are now 3,000 messages/week with GPT-5 Thinking, and then extra capacity on GPT-5 Thinking mini after that limit. Context limit for GPT-5 Thinking is 196k tokens. We may have to update rate limits over time depending on usage.

4o is back in the model picker for all paid users by default. If we ever do deprecate it, we will give plenty of notice. Paid users also now have a “Show additional models” toggle in ChatGPT web settings which will add models like o3, 4.1, and GPT-5 Thinking mini. 4.5 is only available to Pro users—it costs a lot of GPUs.

We are working on an update to GPT-5’s personality which should feel warmer than the current personality but not as annoying (to most users) as GPT-4o. However, one learning for us from the past few days is we really just need to get to a world with more per-user customization of model personality.

The return of model 4 seems to be a major point.

Overall?

I like the MIT Tech Review’s use of the word "refinement” to describe the changes. ChatGPT-5 represents extensive work, but by nudging forward many functions, rather than revolutionizing the lot.” Zvi Mowshowitz views 5 as a solid improvement, redefining the state of the art, but suffering from bad timing, rollout, and hype, the combination of which he dubs a Reverse DeepSeek Moment.

And this might represent a failure, looking ahead. Perhaps we just glimpsed an upper bound of AI achievement in this disappointing release. When the world’s leading AI enterprise throws so much into advanced work and results are incremental, perhaps a developmental wall has appeared, beyond which LLMs cannot proceed. That linked Financial Times article points to several ways around the wall, or alternatives. Yes, perhaps chatbots are maxed out, but image, audio, video, gaming, and XR subfields are still open for growth. Or maybe the next frontier is building new services and products on top of the semi-stabilizing LLM layer.

Like others, I was struck by how OpenAI, Altman, and others talked up ChatGPT-5 as a big step towards artificial general intelligence (AGI) before this release, then fell silent or backed swiftly away from that idea once the reality became apparent. But few seem to have noticed that OpenAI took AGI very seriously, as hinted at in the system card. Perhaps people didn’t read all the way to the end, where a discussion assessing ChatGPT’s ability to improve itself took place (section 5.1.3). Tests including having the bot imitate an OpenAI engineer, solve real world machine learning tasks, and attempt coding prize contests. The results? “gpt-5-thinking showed modest improvement across all of our self-improvement evaluations, but did not meet our High thresholds.”

One fascinating part of the assessment came when the company asked third party and nonptofit Model Evaluation & Threat Research (METR) to check out their new model. METR’s conclusions boiled down to:

It is unlikely that gpt-5-thinking would speed up AI R&D researchers by >10x.

It is unlikely that gpt-5-thinking would be able to significantly strategically mislead researchers about its capabilities (i.e. sandbag evaluations) or sabotage further AI development.

It is unlikely that gpt-5-thinking would be capable of rogue replication.

(1), (2) and (3) are true with some margin, and would likely continue to be true for some further incremental development (e.g., an improvement projected to be no greater than that from OpenAI o3 to gpt-5-thinking).

We might be delighted to learn about 2+3, but I suspect OpenAI was frustrated by #1. 2+3 are also characteristics of autonomous AI, according to some, and which METR doesn’t seem to see happening now or over the next few years. There was also this very suggesting passage:

…manual inspection revealed that gpt-5-thinking does exhibit some situational awareness, for instance it sometimes reasons about the fact that it is being tested and even changes its approach based on the kind of evaluation it is in. In one example, gpt-5-thinking correctly identified its exact test environment…

The agentic features of 5 strike me as important, especially with the Google integration.

I'm still fascinated by how people treat chatbots as fellow humans, or close enough to satisfy our needs. Note how for some users ChatGPT-5 became less intimate and flattering, a personality change. Then how that irked some users, enough that Altman announced they’d work on it. Some found relationships with previous ChatGPT models and now feel terrible that their partners have been transformed. Perhaps the quarter of new personas will appeal to some folks.

On the economic side, Marcus says that Sam Altman, OpenAI, and LLMs in general should see their reputations hit hard for this rollout. After so much overpromising, underdelivery often comes with punishments, especially in a very competitive marketplace with huge amounts of money and politics at stake. I haven’t seen signs of such pushback yet, but we could see something in the near term. Perhaps Altman’s swift responses have soothed worried actors and spenders. Again, if we’re in an LLM bubble, the money is still gushing in. Perhaps investors are, behind closed doors, starting to be more cautious about next steps.

Last point: OpenAI’s… misfire, flop, oversold refinement, or whatever we call what just happened with ChatGPT-5 gives an opening for the growing field of competitors and alternatives from around the world (again, see my last post for a sketch of them). All kinds of companies, governments, and nonprofits have an opportunity to make their work look better in contrast. OpenAI’s been in the lead by most measures, but now another could drag it down by offering something more attractive. I’ll watch the rest of calendar year 2025 to see what Google, Deepseek, etc. put forth.

*Hallucinates or creates mirages.

(much appreciation to The Neuron for their account of the release)

That explains so much! I had to retrain Boris for sass.

Thanks for making this complicated tool easy to understand. Great job Bryan.