Generative AI at the end of 2024

A scan of some technological developments

As 2024 shambles to a close, generative AI continues to develop at a fast pace, spreading across the technological world into new functions and domains.

With this scanner post I’d like to share very recent developments and stories. I’ll identify some themes and trends I see therein.

Agents are an increasingly popular AI subtopic. In theory, agents are a next step for large language models (LLMs) beyond chatbots and media creators: the idea is for agents to access databases, desktop applications, documents, and the internet. It’s an old idea, but one getting new life with new projects. Here’s a good writeup describing the whole stack of functions agents need to work, including frameworks, tool libraries, memory, and model serving.
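To make that stack a bit more concrete, here is a minimal sketch of how the pieces fit together in one loop. Everything in it is hypothetical: `lookup_policy` is a made-up tool, `fake_model` is a fixed rule standing in for a served LLM, and the loop is a toy "framework," not any real agent product's design.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# --- Tool library: functions the agent is allowed to call (hypothetical) ---
def lookup_policy(topic: str) -> str:
    # Placeholder: a real tool might query an HR database or document store.
    return f"(placeholder policy text about {topic})"

TOOLS: Dict[str, Callable[[str], str]] = {"lookup_policy": lookup_policy}

# --- Memory: a running log the model sees on every step ---
@dataclass
class Memory:
    events: List[str] = field(default_factory=list)

    def add(self, event: str) -> None:
        self.events.append(event)

    def observations(self) -> List[str]:
        return [e for e in self.events if e.startswith("observation: ")]

# --- Model serving: stand-in for a call to a hosted or local LLM ---
def fake_model(memory: Memory) -> Tuple[str, str]:
    """Return (action, argument). A fixed rule replaces a real model here."""
    seen = memory.observations()
    if not seen:
        return ("lookup_policy", "laptop")
    return ("finish", seen[-1][len("observation: "):])

# --- Framework: the loop that ties model, tools, and memory together ---
def run_agent(task: str, max_steps: int = 5) -> str:
    memory = Memory()
    memory.add(f"task: {task}")
    for _ in range(max_steps):
        action, arg = fake_model(memory)
        if action == "finish":
            return arg
        tool = TOOLS.get(action)
        result = tool(arg) if tool else f"unknown tool {action!r}"
        memory.add(f"observation: {result}")
    return "stopped: step limit reached"

if __name__ == "__main__":
    print(run_agent("Can I request a new laptop?"))
```

The step limit matters more than it looks: a real model can loop indefinitely, so even a toy framework needs a way to stop.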

More examples are popping up. Microsoft has released a series of agents for Copilot, including:

The Employee Self-Service Agent in Business Chat quickly answers common policy questions and makes it easy to complete key tasks, starting with HR and IT. It can help employees understand their benefits, for instance, or request a new laptop. And it can be customized in Copilot Studio to meet the unique needs of your organization. In private preview.

Other agents include Facilitator, which takes real-time notes in Teams meetings and chats, and Project Manager, which automates plan creation and even completes tasks in Microsoft Planner. In public preview. [bold and italics in original]

(Microsoft doing agents? I’ll resist the usual Clippy jokes. For now.)

Elsewhere, Anthropic has released the Model Context Protocol (MCP) as open source. It aims to provide “a new standard for connecting AI assistants to the systems where data lives, including content repositories, business tools, and development environments.” There’s a lot in there, such as “specification and SDKs…Local MCP server support in the Claude Desktop apps…An open-source repository of MCP servers.” This is a big step in agent development.
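Under the hood, MCP messages are JSON-RPC 2.0, and the specification defines methods such as `tools/list` and `tools/call`. The sketch below only builds and parses raw messages in that shape, based on my reading of the spec; it does not use Anthropic’s SDKs, the tool name `search_documents` is hypothetical, and the sample response is invented for illustration. Check the current specification before relying on the exact field names.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

_next_id = count(1)  # JSON-RPC ids let a client match responses to requests

def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request (the message format MCP is built on)."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)

def parse_response(raw: str) -> Dict[str, Any]:
    """Return the result of a JSON-RPC 2.0 response, or raise on an error object."""
    msg = json.loads(raw)
    if msg.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "error" in msg:
        err = msg["error"]
        raise RuntimeError(f"server error {err['code']}: {err['message']}")
    return msg["result"]

# Discover a server's tools, then call one.
# "search_documents" and its arguments are hypothetical.
list_tools = make_request("tools/list")
call_tool = make_request(
    "tools/call",
    {"name": "search_documents", "arguments": {"query": "Q3 budget"}},
)

# A response shaped like an MCP tool result (text content), invented for illustration.
sample = '{"jsonrpc": "2.0", "id": 2, "result": {"content": [{"type": "text", "text": "3 matching files"}]}}'
print(parse_response(sample)["content"][0]["text"])
```

The appeal of a shared protocol is visible even at this scale: any client that can emit these messages can, in principle, talk to any server that answers them.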

Meanwhile, Google claims to have developed an agent good enough to find security vulnerabilities in the real world. The agent’s name is the very noir Big Sleep, and it comes from Google’s Project Zero and DeepMind. The official announcement says the discovery took place this fall, and states that “We believe this is the first public example of an AI agent finding a previously unknown exploitable memory-safety issue in widely used real-world software.”

AI in web search

The AI search wars are heating up, as OpenAI has released a ChatGPT-powered web search tool. Right now it answers queries with prose summaries and links to several sources, and it seems to scan the web in real time. Here’s a good comparison of SearchGPT to Google Search and Microsoft’s Bing across many points: images, sources, ranking, and more. Columbia Journalism Review found that many of its news media citations were wrong; Search Engine Journal found that more than three quarters of all of its references were incorrect.

Remember that Google includes AI in its world-leading search service. Microsoft does as well in Bing.

The AI browser?

A report is circulating that OpenAI is building a web browser. No technical details are available, although the initial report (paywalled) apparently says OpenAI is talking with publishers.

An interesting design problem: what might an AI-first browser look like?

More video tools

Meta is preparing to unleash Movie Gen, a tool that lets us generate video clips up to 16 seconds long from text prompts and/or a photo. The official webpage adds that users will be able to edit video with text prompts, and it also offers some charming clips. There are also rumors that Amazon is about to release Olympus, an image and video generator.

Shifting from video clips to videoconferencing, HeyGen announced that users can send their avatars into Zoom meetings, including “multiple Zoom meetings, simultaneously.” Zoom’s leader has previously expressed interest in such a thing. I haven’t tried this yet.

Audio tools and interfaces

NVIDIA announced Fugatto, an AI audio generator. Users can enter text commands to produce music and other sounds (apparently; it’s not public yet). Here’s the GitHub site.

On a related audio front, ElevenLabs released GenFM, an iOS app that generates audio of two human voices in conversation. Why yes, that sounds a lot like Google’s NotebookLM.

On a broader level, Louis Rosenberg makes the case for audio-based interaction with AI, which he dubs the Whisperverse. The idea is that an AI whispering in the user’s ear can augment their knowledge during a conversation.

Open source AI continues to develop

Mistral released Pixtral Large, a very large open source model that can respond to both text and image inputs. “Pixtral Large is the second model in our multimodal family and demonstrates frontier-level image understanding. Particularly, the model is able to understand documents, charts and natural images…”

For a quick test, I logged into Mistral’s chat service, Le Chat, then uploaded this image:

Pixtral described it thusly:

In the image, I see a cartoon depiction of a bear. The bear has a somewhat stern or grumpy expression on its face. It has dark brown fur on its head and lighter brown fur on its muzzle and body. The bear's eyes are looking slightly to the side, and its eyebrows are furrowed, contributing to the overall grumpy appearance. The background appears to be outdoors with a blue sky.

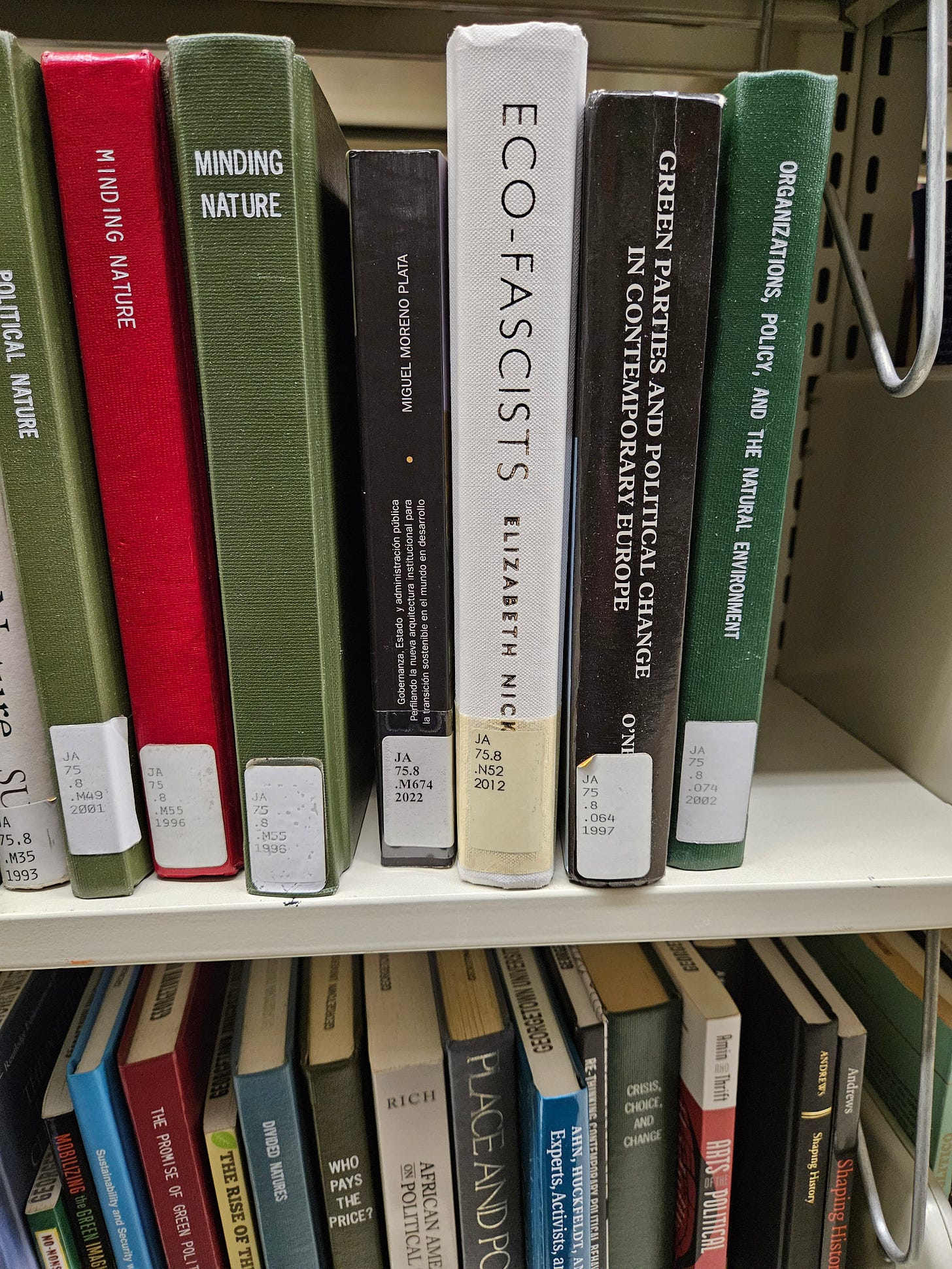

Pretty good. I then uploaded this photo of a library bookshelf:

Pixtral described it:

In the image, I see a shelf filled with books, likely in a library or bookstore. The books are arranged vertically, and their spines are facing outward, displaying their titles. Here are some of the visible book titles:

"Organizations, Policy, and the Natural Environment"

"Green Parties and Political Change in Contemporary Europe"

"Eco-Fascists" by Elizabeth Nick

"Minding Nature"

"Political Nature"

The books appear to be related to topics such as environmental policy, green politics, and ecological studies. The shelf is organized, and each book has a label or tag, possibly for cataloging purposes. The background includes more books, suggesting a larger collection.

Decent, but it holds back from OCR-ing most of the visible text.

Another open source project came from Alibaba’s Qwen team. QwQ-32B-Preview is a chatbot like OpenAI’s o1, meaning it can reflect on its output and show some kind of reasoning, or so says the development team. Here’s an analysis from TechCrunch.

One more open source development: Meta launched smaller versions of its open source-ish* Llama AI, small enough to run on smartphones. Meta claims these models are not only smaller but faster than previous iterations. How does this work?

The advancement uses a compression technique called quantization, which simplifies the mathematical calculations that power AI models. Meta combined two methods: Quantization-Aware Training with LoRA adaptors (QLoRA) to maintain accuracy, and SpinQuant to improve portability.
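Quantization stores a model’s weights as low-bit integers plus a scale factor, trading a little precision for a lot of memory. Below is a minimal sketch of the simplest variant, per-tensor symmetric round-to-nearest quantization, plus the back-of-the-envelope arithmetic behind “small enough for a phone.” It is an illustration under those assumptions, not Meta’s QLoRA or SpinQuant pipeline, which add training-time and rotation steps; the weights and the 1-billion-parameter model are hypothetical.

```python
from typing import List, Tuple

def quantize_symmetric(weights: List[float], bits: int = 4) -> Tuple[List[int], float]:
    """Round-to-nearest symmetric quantization with one shared scale per tensor."""
    qmax = 2 ** (bits - 1) - 1           # e.g. 7 for 4-bit signed integers
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Divide by the scale, round, and clamp into the representable range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Approximate the original floats from the stored integers."""
    return [v * scale for v in q]

def weight_memory_gb(n_params: int, bits: int) -> float:
    """Rough storage for weights alone (ignores activations and overhead)."""
    return n_params * bits / 8 / 1e9

weights = [0.7, -0.3, 0.1, 0.0, -0.62]   # hypothetical weights
q, scale = quantize_symmetric(weights, bits=4)
print(q, [round(x, 3) for x in dequantize(q, scale)])

# Hypothetical 1-billion-parameter model: 16-bit vs 4-bit weights.
print(weight_memory_gb(1_000_000_000, 16), weight_memory_gb(1_000_000_000, 4))  # 2.0 0.5
```

Going from 16 to 4 bits cuts weight storage by a factor of four. The catch shows up with outliers: quantizing `[0.01, 0.02, -0.015, 10.0]` gives `[0, 0, 0, 7]`, because the single large value sets the scale and the small weights all round to zero. That sensitivity to outliers is one of the problems rotation-based methods such as SpinQuant aim to reduce; this sketch does not implement them.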

Is generative AI stalling out?

A Bloomberg article reports on skepticism about the next round of leading LLMs. Quality and timelines are slipping for iterations of ChatGPT, Claude, and Gemini:

It’s become increasingly difficult to find new, untapped sources of high-quality, human-made training data that can be used to build more advanced AI systems. Orion’s unsatisfactory coding performance was due in part to the lack of sufficient coding data to train on, two people said. At the same time, even modest improvements may not be enough to justify the tremendous costs associated with building and operating new models, or to live up to the expectations that come with branding a product as a major upgrade.

If AI firms are running out of human-generated content, they can create synthetic data instead. But that runs into problems:

“It is less about quantity and more about quality and diversity of data,” said Lila Tretikov, head of AI strategy at New Enterprise Associates and former deputy chief technology officer at Microsoft. “We can generate quantity synthetically, yet we struggle to get unique, high-quality datasets without human guidance, especially when it comes to language.”

In a brief response, OpenAI CEO Sam Altman apparently dismissed this analysis.

On the AI-hardware integration front, Chinese technology giant Baidu demonstrated smart glasses running its ERNIE AI software. The Financial Times reports they should hit the market next year.

What themes and trends can we derive from these developments?

The push for agents is likely to continue, at least in the short term. I’m wary, though, given the many technological, institutional, cultural, and behavioral challenges a real agent ecosystem must overcome in order to exist, much less thrive. Here’s a good, cautionary description of the rough road ahead.

OpenAI is ramping up its competition with Google, both in search (Google’s centerpiece) and, apparently, in the browser space (where Google’s Chrome is a leader). I do wonder just how much pressure Microsoft is placing on its junior partner here.

The possibility that generative AI is hitting a wall is of supreme importance. If true, it means that AI firms will more aggressively go after human-generated online content; they might pursue more partnerships with publishers and other intellectual property holders; and financial capital might sour, as I’ve warned. Where would that pent-up investment of money, human talent, and energy go? I suspect it’ll push into robotics, so watch for more AI-hardware integration as a signal.

On Meta’s incredible shrinking AI: this is a very important and often neglected trend, for three reasons. First, mobile devices (primarily smartphones) are in many ways the leading way humans interact with the digital world, so designing and offering AIs for handhelds is a solid way to get the tech into as many hands as possible. Second, the energy and water demands of generative AI are notoriously immense, if often overstated. Shrinking computational needs can at least take the edge off that problem, if not seriously address it. Third, making tech smaller and more efficient is a deep, powerful technological trend we’ve seen for more than a century. We should start thinking about a future of microAI.

One final futures thought: I’ve been musing about how rising AI might fit into human civilization in a particular way. We’ve already seen people using AI to make and do stuff, yes, but there are signs of AI serving as an intermediary between people, often as several AIs. An AI agent would fit into that role, as when it buys a product for me from a small business. Someone on the job market may use AI to identify the best places to apply, while firms use AI to screen out poor applicants. Bumble’s CEO imagines a near future where people on the dating scene each use AI to sift through candidates. On the hardware side of things, we’re already seeing bots fighting bots in Ukraine and the Middle East.

I think of this as an automation layer (AL) in human activities, an intermediary that increasingly fits into our lives. We’ll have to learn how best to use what’s in that layer, which may involve refusing it. And, of course, for the AL to exist, technologists and related professionals will have to build it out. Cf. the caution above about an agent ecosystem.

In the meantime, all the best from my automatons to your automation layer inhabitants.

*Yes, there’s a lot of debate over just how open Meta’s open source AI is. I don’t have time for that today. Maybe in another post.

Great info and summary; thank you, Bryan. I just did a video on AI supposedly running out of data and slowing down: https://youtu.be/exm56cPVuTc I argue that it is untrue and more of a schematics issue. I really liked that you brought up "microAI" in that this will be important for all mobile apps so that we will have AI at all times, even without the Internet. Once we have AI robots everywhere, the major issue will be human relationships with the AI. We will need to seriously address aspects of appropriate relationships and societal norms.

Very comprehensive summary. Thanks for sharing.

How AI agents interact is a huge unopen question. Error handling alone is mind-boggling to consider. Deploying these things in real world applications will be… interesting.