How do we speak about generative AI?

An online discussion and Substack experiment

Ten days ago we held a fascinating discussion about AI and language on the Future Trends Forum. Our guests were Anna Mills and Nate Angell, authors of a new paper, "Are We Tripping? The Mirage of AI Hallucinations." We used their research as a launching point for discussing AI and higher education.

(If you don’t know the Forum, it’s a weekly video conversation about the future of higher education. We cover a huge range of topics and each session is purely discussion-based. Here’s the home page and here’s the video archive going back more than nine years.)

I appreciated how this very humanities (or digital humanities) perspective opened up so many lines of thought into the topic. We worked through language as a gateway, which let us explore questions of computer science, economics, politics, literature, and what it means to be human.

So with this post I’d like to try something new. I’ll share the YouTube recording, along with our very energetic chat conversation and some more materials. I’m doing this because the discussion was so rich, including a torrent of references and readings.

Let me know if this kind of post is useful to you.

To begin with, here’s the full recording:

Second, here is a link to Mills and Angell’s paper with Hypothesis annotation enabled.

Third, Nate shared his thoughts about the session across social media (Bluesky, Mastodon, LinkedIn). Here they are:

Engaging talk about the pros and cons of the anthropomorphization of #AI — or what I like to call ✨sparkling intelligence✨ — and what it means to be human. We quote @hikemix.bsky.social et al in our paper: "Humans have a variety of goals and behaviours, most of which are extra-linguistic".

We talked about what it means to be embodied biologically, what it means to have sensations, what it means to be conscious, and what it means to be social. Which of these are still exclusively human?

With #AI literacies, we talked about the difference between literacies in being able to use AI and literacies for understanding how AI works. And I would add, how AI works not just as a technology, but within social/political/economic/knowledge contexts

We talked about how activities like this that question AI terminology and metaphor can help estrange our relationship to AI so we think about it more critically.

@davidmchugh.bsky.social pointed to his Tournament of AI Metaphors activity: www.linkedin.com/posts/mchugh...

We talked about the balance and tension between using AI critically in local contexts and yet still also recognizing, confronting, and counteracting the negative impacts and risks AI is generating as it is being deployed.

We talked about the tension between AI outputs on one side and their truth/facticity on the other. I contend that the value and utility of both sides are contested, so AI mirages are not simply a question about the degree to which AI outputs match "the truth".

We pointed to the ways you can get involved in this conversation, including on social media, in the annotated conversation on our paper, in the reuse of Anna's data, and in our related and growing collaborative bibliography. See our paper or my blog post for links: xolotl.org/are-we-tripp...

We talked about so much more, which I hope to revisit when Bryan publishes artifacts from today's conversation. Meanwhile, I'd love to connect and engage with anyone who was there today or anyone who wishes they were! 🥳 Thank you @annamillsoer.bsky.social and @edufuturist.bsky.social!

Fourth, what follows are the chat notes. I’ve edited them in a few ways, starting by removing everyone’s names, since people didn’t sign releases. I also removed some banter, logistical notes, and Zoom reactions, then made hyperlinks active. I took care of a few typos, then arranged the results to make more sense for readers who weren’t in the live session. Headers and [bracketed helpful notes] are mine, but the other words (all in italics) are from the Forum community. I hope this gives you a sense of the ideas and the conversational flow. Keep in mind that the chat responds both to other chat messages and to the video conversation.

The first section focuses on language: word choice, metaphors, meaning, implications. The second branches out into other, related areas, including education, power, business, embodiment, human relationships, science fiction, and more. I’ll return at the very end.

1: AI and the language we use for it

Introductions

Anna is working on an AI-powered, not-for-profit essay feedback project. She maintains a list, “Alternate terms for language model ‘hallucination.’” There is also a Zotero-based collaborative bibliography.

“Mirage” versus “hallucination”

Anna’s use of mirage and her explanation of why she uses that term has been tremendously helpful in my workshops because it helps folks understand a few key things: “there is no mind” and also how the basic machine learning underpinnings work.

Mirage centers the viewer, the human perception, so much better than "hallucination," which suggests agency of the AI.

Does anybody know who came up with the term “hallucinations” in the first place? Was it Sutskever?

Do we need completely new words to describe how this artificial intelligence acts? Do computers need their own words? “AI-mirage,” perhaps: some way to denote that it is artificial.

Hallucinations are not always wrong, per se. Sometimes the AI shares something "true" that is "wrong" in a particular context. Experts know the difference, but our students do not.

I asked our friend Dr. Oblivion, “Why is it better to refer to AI hallucinations as AI mirages?” Here is his response.

If it’s a mirage when GenAI returns “wrong” or “non factual” or gibberish responses, what is the name when it returns something we might not argue with, be it “right” or “sensible”? Isn’t it always a mirage because the process and methods that return the result are exactly the same? Or is that the rainbow?

I like this section from Anna and Nate's piece:

In making a mirage, the desert, the heat, and the air are not mistaken; they don’t conspire to trick you. Physics is working as expected. The mirage is really there, it’s just not water. It is us, the thirsty travelers, who mistake the mirage for an oasis or see it as a cruel trick.

Are "mirages" more plausible than "hallucinations"?

Joshua Pearson examines the history of the term “hallucination” in the development and promotion of AI technology: “Why ‘Hallucination’? Examining the History, and Stakes, of How We Label AI’s Undesirable Output” (2024).

Mirages leading us into quicksand?

A wilderness of mirrors...

On the meaning and use of AI metaphors

Metaphors are sticky -- and often bring unexpected implications when you start to look at them deeply.

I use the concept of metaphors to teach how we adapt to new technologies every fall - it’s a foundational human communicative practice to deal with things that are new to us. It’s pretty mind-blowing to a lot of my students.

Using metaphors to think about AI is a good way to think critically about the kinds of analogic processes built into LLMs. (Do LLMs use metaphors?)

Here’s the paper on AI metaphors I did with Anuj Gupta, Maha Bali, and Yasser Tamer: “Assistant, Parrot, or Colonizing Loudspeaker? ChatGPT Metaphors for Developing Critical AI Literacies.” (2024)

There’s an ongoing exploration of the role of metaphors in digital technologies. Annette Markham and Katrin Tiidenberg have been doing this for a number of years now (Metaphors of Internet: Ways of Being in the Age of Ubiquity, 2020).

Yes, that’s the precision I think we need to be careful about, that treating a non-human thing as something that’s alive is not some moral failure, but when companies try to use that desire for profit, that’s concerning.

For some reason, I always say "please" when I ask an AI platform a question.

I feel like we’re also in competition with massive marketing budgets and with the influence of the tendencies for people in influential positions to see economic benefits to certain metaphors; our imaginations are often constrained by the selling of certain images and their omnipresence.

If we want to put pressure on word choice and language too, search engine optimization, and tools (often AI-generated) built with SEO and similar strategies in mind, are also shaping the language around AI (plus the uptick in AI slop content fueled by SEO).

Visual metaphors

I am collecting “sadly robotic” metaphors for AI, some from Bryan’s Substack ;-). Anyone can add to it.

[responses] Interesting! Have you seen good visual metaphors beyond sparkles and robots (and the occasional parrot)? I enjoy the robots but feel they're overdone.

There’s a website, Better Images of AI.

I like Janelle Shane’s drawings of hapless robots.

Anyone see the introduction of Blue the robot?

Better Images of AI was the inspiration for my site!

I find the GenAI image style boring.

Alternative words for AI

"Hallucination" emphasizes complete fabrication—perceptions without external stimulus—highlighting how AI creates convincing yet entirely false content. "Mirage" represents distortion of something real, capturing how AI falsehoods often contain kernels of truth that become warped and disappear upon closer inspection. "Bullshit," from Frankfurt's philosophy, focuses on indifference to truth rather than deliberate deception. AI systems, like bullshitters, prioritize producing plausible responses over accuracy. While "hallucination" is the standard term, "bullshit" better represents AI's truth-indifferent content generation process, and "mirage" adds the dimension of transformed partial truths.

[in response] I’ll say that people are reacting badly to the “bullshit” language - I’ve seen a number of folks get offended - this is better since from my perspective what’s really at stake is keeping the role of humans in the technology at the forefront.

I read "edge of their distribution" yesterday as a euphemism on Benjamin Riley's substack. I like that phrase.

Any metaphor will be better than "Stochastic parrot" 😒

"Inaccuracies" is a good neutral term.

The conversation around GenAI has only one metaphor that is more devious than “hallucinations”, and it’s “prompt engineering”

I now use the term 🌟sparkling intelligence🌟

[response] Sorry Nate, language doesn’t need our permission or approval to evolve. “Sparkling intelligence” works because we get the original joke about champagne.

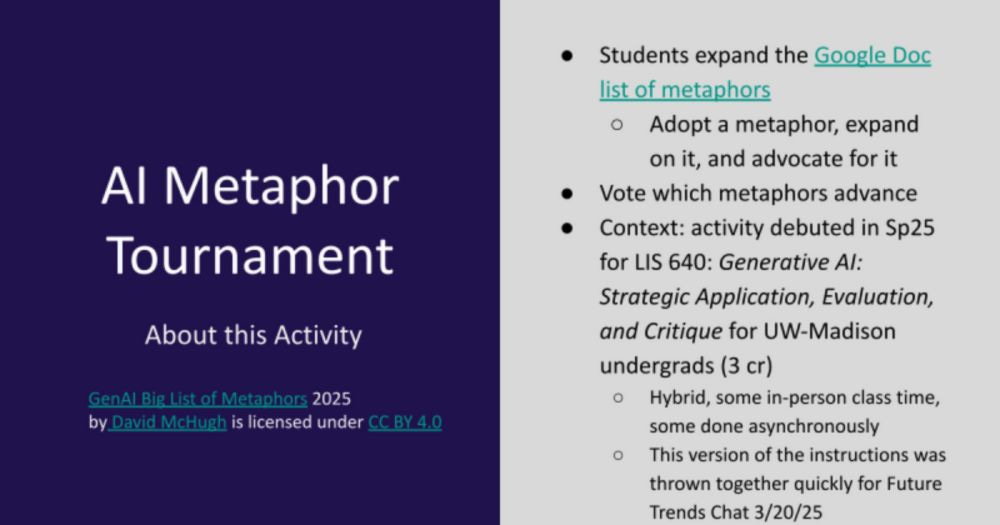

I held a tournament of metaphors in my GenAI class (undergrads) this semester. Mirror Reflecting Society was the winner this time. Each student suggested or adopted a metaphor and was encouraged to argue on its behalf, covering what it Clarifies and what it Obscures. I was hoping for more stumping from students, but I was happy with the activity overall! Pattern Matching Machine was the runner-up. There were some other interesting ones, like Alchemy, that made it partway. (spreadsheet of results)

[response] Oh I love the tournament of metaphors idea!

I’m thinking about other words we might put productive pressure on in the context of AI: “efficiency” or “detection” (in AI detection contexts). There are other words too; those just came to the top of my mind.

Using the word “memory” for storing electric impulses is problematic.

“Misprediction” is fabulous because it places emphasis on the fact that GenAI output isn’t “knowledge” or “intelligence”.

[response] I think misprediction describes some miraging, but not all. Like is Pepperoni Hug Spot best described as a misprediction?

Problems with the word “AI”

I think the (hype-driven) conflation of LLMs and AI is a real problem.

Actually, I think we should poke even at the words "AI," not for the usual critique that it’s neither artificial nor intelligent, or because it is culturally associated with lots of baggage (HAL 9000, Skynet, etc.), but because we have a more accurate phrase to use: statistical model. That isn't a metaphor; it is the literal truth.

Why not "statistical model"? Again, that's what it is.

Increasingly, we’re realizing in psycholinguistics that human language acquisition and processing is more of a massive statistical analysis operation than previously understood…

Herbert Simon (in 1956, I think) had proposed “complex information processing.” See Alberto Romero, “What Would the World Look Like if AI Wasn’t Called AI? A thought experiment of what could have been.” (2021)

“Text predictor”?

I agree that the conflation of AI with LLMs (and gen AI) is problematic, particularly because there have been decades of academic research on AI that now mostly gets conflated with Big Tech. Hugging Face, for example, has over a million models, and many of them are not associated with Big Tech at all.

My point is that we need to learn to play an AI like we learn how to type or play music or take images.

I bring in a couple of other considerations in my blog post introducing our paper, including the idea of “AI rainbows,” which are mirages that we appreciate: “Are We Tripping? The Mirage of AI Hallucinations.” (2025)

Anthropomorphizing AI

I think one of the things I’m trying to parse out is that it’s not anthropomorphizing itself that’s the threat here, but that anthropomorphizing AI specifically carries unique threats because it is such a powerful tool.

And more specifically, there’s a threat when COMPANIES anthropomorphize it (less so for individuals who might do it but with some level of awareness of what they’re doing).

I like the reduced anthropomorphization for mirage. It seems to imply inevitability to me, which seems to have pros/cons (good to emphasize that it's all non-grounded, even if useful. Con of maybe implying it's not something addressable?)

Well, one of the perspectives here is that we all anthropomorphize every day (our brains see the world not as it is but as we are, and all that), and neurodivergent folks in particular do it a lot, so I want us to be careful not to shame anthropomorphizing in general.

I wonder if anthropomorphizing AI will become an actual psychological disorder.

But saying a technology can be “wrong” gets back into anthropomorphizing. Generative AI and predictive AI do what they’re programmed to do. They have fundamental limits, and those limits are all about the humans who develop them, market them, and then use them.

I don't think we can stop the anthropomorphizing, especially as a lot of money will be made to encourage this behavior.

Do we think that understanding the way the tech actually works will help people avoid anthropomorphizing it?

The anthropomorphization does not bother me so much. I don’t think computers are humans or animals. They don’t have brains. However, I have come around to the possibility that they can be intelligent. I see human and machine intelligence differently. Even things with brains (cats vs. humans), have a different kind of intelligence.

Again, this is an agency issue - where I find anthropomorphizing dangerous is that it feeds into our tendency to want to find someone responsible for a problem. The tool - when it’s anthropomorphized - becomes a visible target, rather than the teams of humans who actually create them. It ties into all our tendencies towards conspiracy theories and needs for simple answers to complex problems.

Are technical changes and improvements in practice reducing mirages and hallucinations?

Has the number of mirages diminished as the models proliferate? Does, for example, ChatGPT 4o do better than 4o-mini or Gemini?

Yes, ongoing reductions of hallucinations have been occurring as we continue to improve AI. Cf Jakob Nielsen, “AI Hallucinations on the Decline.”

AI hallucinations are decreasing, in part because of guardrails put in place but also because of new approaches which can cite such as RAG and web agents.

Are the kinds of uses outlined there somehow less suited and more likely to produce mirages than other ways of using them?

With improved reasoning models, hallucinations continue to be reduced because of the way these models work: they produce a result, then review it, contemplate it, and think it through. This is a major reason we are seeing reductions in hallucinations.

Xu, Z., Jain, S., & Kankanhalli, M. (2024). Hallucination is Inevitable: An Innate Limitation of Large Language Models (No. arXiv:2401.11817). arXiv. https://doi.org/10.48550/arXiv.2401.11817

I appreciate the point about increasingly subtle mirages and the agency piece (that's where I worry that mirage may imply less user agency in dealing with it).

From Ethan Mollick:

Some people frequently (rightly) point out that AIs make mistakes and are not reliable. And hallucinations may, indeed, never completely be solved. But I am not sure that matters much in the future. Larger and more advanced models already make far less errors than older LLMs & many real-world processes are built with error-prone humans in mind.

There may be applications in which LLMs will never be suitable just as there are applications where LLMs are good enough today. There are likely many others where LLMs will become suitable as error rates drop through continued model development. And there are likely many more where a mix of processes and technology could make LLMs work well. I wouldn't bet on hallucinations as a long-term barrier to AI use in many roles.

2: AI in the world in other ways

AI as companions

Oh, for sure, I mean, the loneliness epidemic is very real. We are thirsty for connection and empathy.

I have many students that have told me that ChatGPT is one of their best friends.

Right, a costly issue given the billions being lost to romance scammers…

Listen, on the AI boyfriend/girlfriend convo, I suggest you repeat to yourself: there but for the grace of God go I!

You will be well cared for in the robot revolution.

Maybe emotionally supportive?

A mixture of emotional support, "someone" that is always there, and is very helpful. That sounds like a good friend.

I enjoyed my conversations with Lenin on Character.ai.

Do you think these students would be inclined towards (for lack of a better term) addictive relationships with technology without AI? (e.g., video games, parasocial social media following, etc.)

It's a conversation to "talk" with/to some of the AIs, especially Claude.

Our Epidemic of Loneliness and Isolation.

The design choices emphasizing (and occasionally de-emphasizing) human-like framing for genAI is interesting. Sesame AI's voice model will turbocharge the number of people feeling like chatbots are good friends.

We probably need to go back to Marx and Durkheim for ideas on technologically-driven anomie.

The problem is that many people won't require actual consciousness, just a simulation of it. Actual consciousness in the form of a human has features that other humans don't like. AI provides a "hyperreality" version of consciousness.

AI literacy

I agree that having at least a basic understanding of the way things work is in itself a literacy (not just knowing how to use it). I used to teach high school students what the internet is and how it works physically.

Do we also need to think about the ways we are using it and for what purposes? The Columbia Journalism Review found plenty of problems with finding sources, with summarizing, etc.

Shouldn’t the focus be on quality prompting and the follow-on conversation for detecting hallucination?

I think we can overstate our own “expert” AI literacy in terms of what we may know that our students may not know. In other words, we lapse into assumptions about ourselves and where our students are. So “critical AI literacy” (which is happening here, right now) may be a more useful lens. A useful lens if you can keep it (paraphrasing Ben Franklin).

If the bosses say we need AI literacy, that must be true! (But seriously, let's talk about the power relationships hidden behind that message.)

What businesses mean by AI literacy may be quite different from how we are using the concept here: prompt engineering versus critical understanding and analysis

Here is my educational YouTube Channel. I would love to get all of your comments and develop a community of inquiry there as well.

Is this view of AI literacy either OK or (even if not OK) inevitable? What do we turn over to AI, and what do we keep as part of being human in the world?

This is why AI literacy is so important. Everyone needs to develop it, at all grade levels. It should be part of the curriculum.

We still have businesses that demand we not use it.

I see this as an information literacy issue.

We need to engage with AI like we engage with any library full of books - with a critical eye. Immensely useful, but you need to know how to use it or you can be easily misled.

AI and power

Kate Crawford focuses on the power relationships between the corporate owners, the state, and the users.

I think the focus should be on regulating AI so that companies have to make clear what it is and isn’t.

Do our current senior decision-makers want us to give up agency by turning it over to AI?

Blood in the Machine newsletter.

Disempowerment of workers or dispensing with them altogether is well established in how capital deploys new technologies.

[response] Substituting capital for labor?

It’s the dream of capital, to do away with labor. The recent ad in San Francisco about how AI won’t complain is in a venerable tradition.

Machines are theoretically immortal, we are not.

Because of what we know about the companies behind AI tools, we know they prefer to provide non-zero output (even if it’s BS) instead of “I don’t know”, because the former will sell better

Humans in the loop, people having agency

Yes, our agency is key.

THIS

Our agency is the primary concern.

If humans no longer have a sense of agency, we will have an even greater degree of depression in society. Without a why, what are we?

The humans in the military loop lack agency, it's a flawed analogy.

If we are using AI, it needs to be in our loop. We should have the agency and use it as a tool. Human in the loop often feels like we are the tool and AI has the agency.

Jarek Janio, “The Battle Over AI in Higher Education Classrooms Is Being Fought in the Field of Student Agency.” (2025)

How do we test intelligence?

The Turing test is also extremely problematic - it makes a lot of assumptions about what intelligence is.

Love that we don't really completely understand consciousness

I favor the Voight-Kampff test. ;)

Humans: the most intelligent species (according to humans)

So, "(un)reliability" is what unites our human and artificial interlocutors?

AI and physical embodiment

Why does biological experience matter?

We may well have AGI without consciousness or sensory experience

Thinking of Sir Ken Robinson’s critique of academics as being disembodied, thinking their bodies are just transport systems for their brains 🙂

This is the year of the Robot. So a lot of AI-infused robots will be embodied. It will be interesting to see what that brings us.

Good point… still, there won’t be a reason to think they have conscious experience or sensation

Biologically embedded?

I think it’s really worthwhile to have conversations with reasoning models about bodily experience to challenge the idea that it needs to sense the same way to have valuable insight. I dance Argentine tango and have for the last decade. I have solved issues in my dancing by talking to Claude that I have not been able to for years. It was really mind-blowing.

I agree that embodiment separates human from machine intelligence. For now, at least, machines cannot train on anything we do not offer to them. The boundaries are getting blurrier though with agents that surf the open web and the ability for AI to interpret live video feeds.

I think there is more to embodiment beyond sensing “real life” events. What of desire? Hunger? What of pain? All emotions?

Yes, I agree. Part of being embodied is also being vulnerable and recognizing your mortality.

More psycholinguistics trivia: speech perception actually does work a lot like predictive text, which is how we keep up with the overwhelming speed of the input.

Does anyone know of any humanists doing research on this topic of AI/tech not having experience living in a body?

I’m thinking a lot about Sapolsky’s Behave today…

I don’t see embodiment as so important as consciousness, which includes sensation.

Robots are now getting sensing nodes to understand things like heat and pressure. The Optimus bot showed off a sensor that will let it sense a fragile eggshell, so it can hold the egg properly and break it when needed.

What happens when WE become less embodied? Will we meet the AIs in the middle?

I really like that "if you can't have consciousness, you can't have intention." Does it matter if the intention is from consciousness or from prompting? Wouldn't the intention be to provide an accurate response? Or should we differentiate intention versus intentionality? Is there a difference?

Science fiction notes


Any Battlestar Galactica fans? I think a lot about one of its main storylines: how WE treat robots says more about what we are than about what they are/aren’t.

Forbidden Planet trailer:

Ted Chiang’s story “The Life Cycle of Software Objects” has something to say on this.

Highly recommend this for my fellow sci-fi lovers: Nnedi Okorafor, Death of the Author. (2025)

Do Waymos Dream of Electric Sheep? Do Waymos Run into Electric Deer?

By the way, really liked the new movie Electric State that has sentient robots.

All too often sci-fi uses AI to drive the drama. That tends to make people’s perceptions of AI more dramatic than they need to be.

I’ve been thinking about how in pretty much all of the sci-fi I’ve read/watched, the people who hurt the robots are the bad guys, even if the robots can’t feel that hurt.

[response] That’s very different from the sci-fi I’ve been reading/watching lately. I point you toward everything from 2001 to The Forbin Project to Silo to Star Trek: Discovery (the 2nd season) - I could go on...

We do love robots. And monsters.

Also thinking a lot about Octavia Butler’s Lilith’s Brood Trilogy…if you haven’t read it, do!

Concluding notes

Fascinating how different this discussion is from a Business School discussion of AI

So much of the convo today, with nods, smiles, and side-mentions, is referring back to what we know without AI (in literature, philosophy, etc.) and there is an assumed common ground of background. Can we continue to teach as if people (students) aren’t just deferring to AI & its mirages?

Can AI bluff in poker?

Bryan Alexander, Tom Haymes, and Mark Corbett Wilson have been discussing learning and technology on my "Talking with machines" podcast. Ruben Puentedura and Stephen Downes have joined us so far. Available on your favorite platform.

I keep thinking about intelligence vs. wisdom. I almost wish that, if AI can “do” what we teach as job-applicable skills, we would emphasize liberal arts education.

Thank you so much everyone! I so appreciate the curiosity and ideas and resource sharing.

This has been insightful! Thank you for the reading sources and activities you all shared. I have to run 👏🏼

That was a sensationally good session.

Great stuff. Thank you so much Anna and Nate! Great conversation and high level discussions on all of this.

...and this is Bryan again. Thank you for reading this far. I hope you enjoyed the discussion, both the content and the form. Let me know what you think in comments.

(thanks to Mark Corbett Wilson for one improved link)

Love the chat on so many levels - had I been there, I would’ve contributed this to the chat!

https://open.substack.com/pub/mikekentz/p/all-the-words-we-cannot-use-why-talking?r=elugn&utm_medium=ios