Pinging the scanner

AI and education stories I'm tracking

I’d like to try an experiment today. I’m going to share some of the future of AI and education stories which grabbed my futurist’s attention over the past week, with some comments on each. If this kind of scanning is useful to you, please let me know, and I can keep doing it.

One good introduction to generative AI appeared. The Financial Times published a very accessible and informative long-form page explaining how generative AI works. It’s in Snow Fall style, meaning it unfolds various media in addition to text as you work your way down the page.

I’d like to use this one in class. Recommended.

One note: I’m skeptical of many comparisons between AI and blockchain, but the two do share a pedagogical problem: both are hard to explain. And so far no large language model (LLM) explainer has emerged to rule them all.

Two big developments connected Bard to other Google functions. First, Google linked Bard to a bunch of in-house services. Users can direct Bard to search their content hosted elsewhere in the Google empire: Docs, Drive, Flights, Gmail, Maps, YouTube, and others. Users can turn this off, and Bard won’t train itself on that content, or so Google says.

Second, Bard now has a button which triggers a search-and-correct function, Googling for information to double-check what the AI just shared.

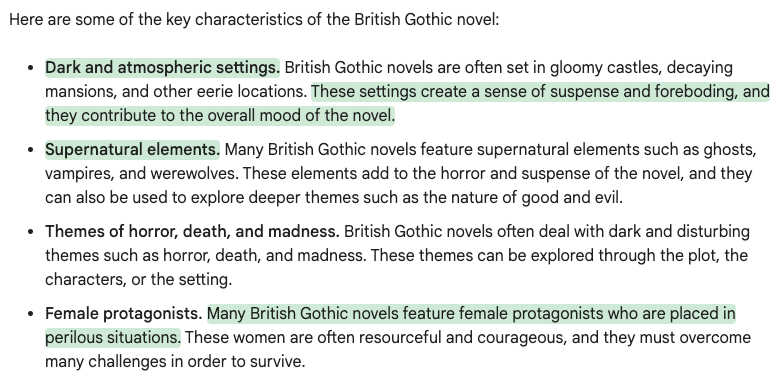

For example, I asked Bard to “tell me about the British Gothic novel.” (I did PhD work on this, so I can assess the results.) Here are the results. I asked Bard to double-check, and after it thought for a few seconds, the app colored in some of its responses, like so:

That green highlight means Google Search agreed with Bard. In contrast, a sort of tan color means “Google Search found content that's likely different from the statement, or it didn't find relevant content.” For example, I asked a deep-future question about humanity’s lifespan on this planet, and the double-check produced one tan highlight, flagging either different or missing content:

I’m not sure what that check yielded in this case, to be honest.

This linking of services is what I’ve been anticipating for a while, given Google’s sprawling series of projects and the company’s internal commitment to AI. It looks like OpenAI is starting to do something similar, linking DALL-E to ChatGPT, at least for a small group of testers.

Further note: Bard’s double-check is a way of addressing the hallucination problem. Watch for more efforts like this, as LLM textbot errors continue to be an issue.

More AI instances in the world. Financial giant Morgan Stanley launched the AI @ Morgan Stanley Assistant for its staff, apparently using GPT-4 as its engine. Amazon piloted a chatbot to assist product reviewers in writing. Warner Music invested in an AI-generated popstar, Noonoouri. Microsoft and Project Gutenberg used AI to create audio versions of thousands of books. Zoom launched an AI assistant.

Takeaway: interest in AI is still building. We haven’t reached the peak yet. Expect more and more generative AI instances in various walks of life.

One nation starts building its own AI. Japanese corporations and the Japanese government have invested in creating generative AI that works in Japanese. The argument is that ChatGPT et al. can’t do a competent job with the language, mangling details, subtleties, and cultural context.

A Nature article describes three projects. The first uses a supercomputer: “Backed by the Tokyo Institute of Technology, Tohoku University, Fujitsu and the government-funded RIKEN group of research centres, the resulting LLM is expected to be released next year.” Another has a particular focus: “Japan’s Ministry of Education, Culture, Sports, Science and Technology is funding the creation of a Japanese AI program tuned to scientific needs that will generate scientific hypotheses by learning from published research, speeding up identification of targets for enquiry.” Then there’s a third:

Japanese telecommunications firm SoftBank, meanwhile, is investing some ¥20 billion into generative AI trained on Japanese text and plans to launch its own LLM next year. Softbank, which has 40 million customers and a partnership with OpenAI investor Microsoft, says it aims to help companies digitize their businesses and increase productivity. SoftBank expects that its LLM will be used by universities, research institutions and other organizations.

This is something I wrote about back in February. LLMs appeared in the world and sought to be globally useful. (Sought, I say, not succeeded.) It was inevitable that sub-global projects would appear, based on combinations of resources and initiative. We should expect more of these, from nations and also from other groupings: religions, corporations, and so on. If you’re interested in thinking about a potential decline of globalization, perhaps this new wave of AI will serve as a useful example.

Note, too, the important role universities play in these projects.

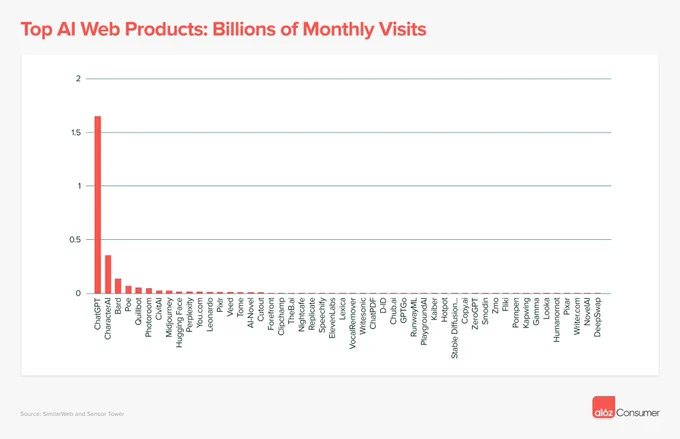

Ranking AIs. Venture capital firm Andreessen Horowitz (a16z) analyzed online traffic to various AI services and compared them. By far the most used AI app was…

…ChatGPT. Coming in second? Character.ai, which normally gets very little discussion.

Takeaways: first, ChatGPT is the emperor of AI as of now, if this data is reliable. That means Bard et al. will have to struggle to catch up. Second, Character.ai’s high ranking suggests they may be doing something peculiarly right, which is worth investigating. My hunch is that plenty of lonely people are delighted to interact with something human-ish (cf. Replika).

More lawsuits. The Authors Guild organized 17 writers to sue OpenAI, citing “the harm and existential threat to the author profession wrought by the unlicensed use of books to create large language models that generate texts.”

The point here: this is another opportunity for a judge to smack down ChatGPT, as I’ve said before. Think of how many services now depend on that service’s API. Think, too, of the possibility of judges setting precedents and/or inspiring legislation.

Using AI in medicine. A group of researchers checked ChatGPT and Bing Chat against professional humans in ophthalmology. OpenAI did well here:

ChatGPT using the GPT-4 model offered high diagnostic and triage accuracy that was comparable to the physician respondents, with no grossly inaccurate statements. Bing Chat had lower accuracy, some instances of grossly inaccurate statements, and a tendency to overestimate triage urgency.

Given the crying need for skilled health care, it’s unsurprising to see such experiments. We should also expect medical organizations to deploy AI to depress worker compensation.

More AI in education. An AI-backed English-language tutor application, ELSA (not ELIZA), launched. The University of Maryland is hosting a webinar on using AI in social work.

Takeaway: as I said before, we haven’t reached peak AI, and the hype hasn’t collapsed, either in higher education or in the wider world. We should expect more of these efforts.

That’s all for today’s Scanner. Please let me know if you’d like me to keep doing this kind of thing, and/or if you have any requests for topics, sources, layout, etc.

(thanks to Ruben Puentedura, Shel Sax for some links and ideas)