Today I’m sharing some stories about emerging AI as part of my scanner series. I do this to be open and transparent about my research, and also to spark discussion of these topics.

I’ve sorted them into three groups: technologies; society, culture, and policy; and higher education.

TECHNOLOGIES

Expanding AI molecular analysis. Thanks to a new model, Google’s AlphaFold is extending its coverage beyond proteins to more kinds of molecules. What it claims now: the ability to “predict accurate structures beyond protein folding, generating highly-accurate structure predictions across ligands, proteins, nucleic acids, and post-translational modifications.” I’m especially impressed by its ability to “frequently reach… atomic accuracy.”

An effort to build AI lab assistants. Future House announced its ten-year mission to develop AI lab assistants, specifically for biology. A former Google CEO, Eric Schmidt, has invested heavily in this effort.

Why this might matter: it shows the persistent interest in the assistant model. (I hope to follow up on this point in the next issue.) Note, too, the focus on the life sciences. Future House chose biology “because we believe biology is the science most likely to advance humanity in the coming decades, through its impact on medicine, food security, and climate.” We might see more efforts along this AI-bio axis.

Using AI to cut back AI hallucinations. A Chinese research team has developed Woodpecker (scholarly paper; open source code), which attempts to correct hallucinations in the output of multimodal large language models (MLLMs) without manual intervention. It works through five stages of correcting visual errors:

(1) Key concept extraction identifies the main objects mentioned in the generated sentences;

(2) Question formulation asks questions around the extracted objects, such as their number and attributes;

(3) Visual knowledge validation answers the formulated questions via expert models…

(4) Visual claim generation converts the above Question-Answer (QA) pairs into a visual knowledge base, which consists of the object-level and attribute-level claims about the input image;

(5) Hallucination correction modifies the hallucinations and adds the corresponding evidence under the guidance of the visual knowledge base.
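To make the five stages concrete, here is a minimal toy sketch of that kind of pipeline. It is not the Woodpecker code. The stage-3 "expert models" are replaced by a hard-coded dictionary of object counts (`SCENE_COUNTS`, hypothetical), key concept extraction is a simple regular expression rather than a language model, and only object counts are checked, not attributes. All function and variable names are illustrative assumptions.

```python
import re

# Hypothetical stand-in for the stage-3 "expert models" (detector / VQA):
# what is actually in the image, as object -> count.
SCENE_COUNTS = {"dog": 1, "frisbee": 1}
VOCAB = {"dog", "cat", "frisbee", "ball"}  # objects this toy can recognise
NUM_TO_INT = {"a": 1, "an": 1, "one": 1, "two": 2, "three": 3}
INT_TO_WORD = {1: "one", 2: "two", 3: "three"}
MENTION = re.compile(r"\b(a|an|one|two|three) (\w+)", re.IGNORECASE)


def extract_concepts(caption):
    """Stage 1: find (start, end, claimed count, object) mentions."""
    mentions = []
    for m in MENTION.finditer(caption):
        number, noun = m.group(1).lower(), m.group(2).lower()
        # Naive singularisation: "dogs" -> "dog" when the stem is a known object.
        obj = noun[:-1] if noun.endswith("s") and noun[:-1] in VOCAB else noun
        if obj in VOCAB:
            mentions.append((m.start(), m.end(), NUM_TO_INT[number], obj))
    return mentions


def formulate_questions(mentions):
    """Stage 2: one counting question per mentioned object."""
    return [(obj, f"How many {obj}s are in the image?") for _, _, _, obj in mentions]


def validate(questions):
    """Stage 3: answer each question (here by dictionary lookup, not a model)."""
    return [(obj, question, SCENE_COUNTS.get(obj, 0)) for obj, question in questions]


def build_knowledge_base(answers):
    """Stage 4: turn QA pairs into object-level claims {object: count}."""
    return {obj: count for obj, _, count in answers}


def correct(caption, mentions, kb):
    """Stage 5: fix wrong counts and flag unsupported objects, citing evidence."""
    # Edit right-to-left so earlier character offsets stay valid.
    for start, end, claimed, obj in sorted(mentions, reverse=True):
        actual = kb[obj]
        if actual == 0:
            fixed = f"{caption[start:end]} [unsupported: no {obj} detected]"
        elif actual != claimed:
            noun = obj if actual == 1 else obj + "s"
            word = INT_TO_WORD.get(actual, str(actual))
            fixed = f"{word} {noun} [evidence: {actual} {obj}(s) detected]"
        else:
            continue  # claim agrees with the knowledge base
        caption = caption[:start] + fixed + caption[end:]
    return caption


def woodpecker_style_correct(caption):
    """Hypothetical end-to-end driver chaining the five toy stages."""
    mentions = extract_concepts(caption)
    kb = build_knowledge_base(validate(formulate_questions(mentions)))
    return correct(caption, mentions, kb)


print(woodpecker_style_correct("A photo of two dogs and a ball."))
# A photo of one dog [evidence: 1 dog(s) detected] and a ball [unsupported: no ball detected].
```

In this sketch each stage hands structured data to the next, so the final step can attach the evidence behind every edit; in a real system, stages 1, 2, 3, and 5 would each be model calls rather than string handling and lookups.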

Here’s one example from the paper:

A few thoughts: I’m still trying to figure out how this works and whether it’s a real advance over manual correction. But if it works, it’s a big step. Note, too, that the authors are all Chinese; China remains a world leader in AI.

SOCIETY, CULTURE, POLICY

Using nothing but generative AI to produce a computer game. One Javi Lopez used ChatGPT to produce code for a basic computer game - without doing any coding himself. He then turned to Midjourney and DALL-E to produce assets, and the result is a playable game.

Takeaways: first, I want to ask my students to try this. Second, it’s another example of potential job losses, if game designers use this approach instead of hiring teams. Third, this isn’t a fully fledged game-authoring tool, but a practice of combining apps built for other purposes. That’s a bit like what I’ve been seeing in video production: using AI to build pieces, which the human then assembles.

Major national and international AI statements appeared. An international group including China and the US (!) met in Britain and issued the Bletchley Park Declaration. The document has many generalities and platitudes, not to mention fond hopes of industry self-regulation, but I note its emphasis on one bit of computing language: “Particular safety risks arise at the ‘frontier’ of AI, understood as being those highly capable general-purpose AI models.” That’s a delicate way of gesturing toward artificial general intelligence. There is also a call for “an internationally inclusive network of scientific research on frontier AI safety.”

Meanwhile, President Biden issued an “Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence.” Note the similar focus on safety. Biden leads off by invoking the 1950 Defense Production Act to require that

companies developing any foundation model that poses a serious risk to national security, national economic security, or national public health and safety must notify the federal government when training the model, and must share the results of all red-team safety tests.

I have no idea how that might hold up legally, but it’s ambitious. A second part of the order concerns watermarking: “The Department of Commerce will develop guidance for content authentication and watermarking to clearly label AI-generated content.” According to Professor Anjana Susarla, that Commerce move builds on a National Institute of Standards and Technology (NIST) framework published earlier this year. This is also ambitious, but it depends on a technology that isn’t viable yet, and, as the MIT Tech Review notes, it has no teeth to make businesses go along. That second problem applies to the red-team requirement as well.

Note: two senators introduced a bill requiring companies to comply with that NIST framework. This goes further than Biden’s order.

HIGHER EDUCATION

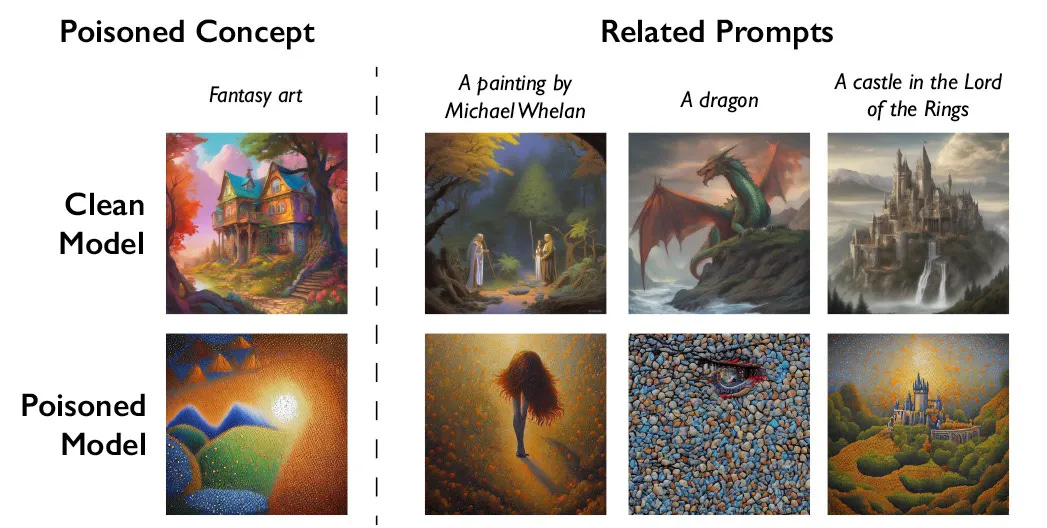

One professor launches an attack on generative AI. A University of Chicago computer scientist, Ben Zhao, recently built an application called Glaze. Glaze subtly alters a visual artist’s work so that it looks different to an AI model than it does to a human viewer, keeping the artist’s creations from being used and repurposed. Now Zhao has a new feature for Glaze, called Nightshade. Its aim is to poison AI training datasets:

Nightshade exploits a security vulnerability in generative AI models, one arising from the fact that they are trained on vast amounts of data—in this case, images that have been hoovered from the internet. Nightshade messes with those images.

Artists who want to upload their work online but don’t want their images to be scraped by AI companies can upload them to Glaze and choose to mask it with an art style different from theirs. They can then also opt to use Nightshade. Once AI developers scrape the internet to get more data to tweak an existing AI model or build a new one, these poisoned samples make their way into the model’s data set and cause it to malfunction.

What would happen next?

Poisoned data samples can manipulate models into learning, for example, that images of hats are cakes, and images of handbags are toasters. The poisoned data is very difficult to remove, as it requires tech companies to painstakingly find and delete each corrupted sample.

For example,

The researchers tested the attack on Stable Diffusion’s latest models and on an AI model they trained themselves from scratch. When they fed Stable Diffusion just 50 poisoned images of dogs and then prompted it to create images of dogs itself, the output started looking weird—creatures with too many limbs and cartoonish faces. With 300 poisoned samples, an attacker can manipulate Stable Diffusion to generate images of dogs to look like cats.
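The basic mechanism described above, poisoned samples pulling a learned concept toward something else, can be illustrated with a deliberately crude toy. This is not Nightshade’s actual technique and has nothing to do with Stable Diffusion’s internals. It assumes a hypothetical “model” that learns each captioned concept as the average of the images carrying that caption, in a made-up two-dimensional feature space; `TRUE_LOOK` and the other names are illustrative only.

```python
# Toy illustration of concept poisoning; NOT Nightshade's actual technique.
# Assumptions (all hypothetical): images are points in a made-up 2-D feature
# space, a "model" learns each concept as the mean of the images captioned
# with it, and "generating" that concept means emitting that mean.

# Where real dogs and cats sit in the invented feature space.
TRUE_LOOK = {"dog": (0.0, 0.0), "cat": (10.0, 10.0)}


def clean_dog_images(n):
    """Genuine dog images: small deterministic jitter around the dog location."""
    return [((i % 5) * 0.1, (i % 3) * 0.1) for i in range(n)]


def poisoned_dog_images(n):
    """Images captioned 'dog' whose features actually sit where cats do."""
    return [TRUE_LOOK["cat"]] * n


def learn_concept(samples):
    """'Training': the learned concept is the mean feature vector."""
    xs, ys = zip(*samples)
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def looks_like(point):
    """Name of the real animal whose location is nearest to the output."""
    def dist2(name):
        tx, ty = TRUE_LOOK[name]
        return (point[0] - tx) ** 2 + (point[1] - ty) ** 2
    return min(TRUE_LOOK, key=dist2)


for n_poison in (0, 50, 300):
    dog = learn_concept(clean_dog_images(200) + poisoned_dog_images(n_poison))
    print(n_poison, "poisoned ->", looks_like(dog))
# 0 poisoned -> dog
# 50 poisoned -> dog
# 300 poisoned -> cat
```

The counts 50 and 300 only echo the article; in this toy the flip happens at about 195 poisoned images, roughly as many as the 200 clean ones, a threshold that comes entirely from the invented data. What matters here is the poisoned share of the “dog” images, not of the whole dataset, which hints at why a single concept can be pushed off course by a relatively small number of samples.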

I’m not sure when Nightshade will be available. The Glaze website leads with this line: “NightShade is on its way. We will announce availability via @TheGlazeProject on Twitter/Instagram.”

Why this matters: first, it’s an example of the resistance to generative AI I’ve been talking about for a while. It won’t destroy, say, DALL-E, but could throw some sand in its digital gears. We should anticipate more examples of this - and, in turn, countermeasures by AI firms.

Second, it’s an instance of academics in particular actively opposing AI, not just through critique but through actions. I haven’t seen much discussion of Nightshade in the academic world, yet I do wonder how campus IT would approach it, or how using the app in one’s work would fit into hiring/promotion reviews.

Librarians grapple with AI. Inside Higher Ed reports on various ways academic librarians are responding to generative AI. These include:

Graduate information/library programs teaching grad students about AI

Librarians creating AI resources (for example)

Librarians helping institutions craft AI policies

Incorporating AI into the next ACRL information literacy guidelines

That’s all for this scanner. I hope this research round-up is useful. Please let me know. And if you’d like me to adjust it - focusing on a particular area, writing more, writing less, etc. - the comments box awaits you.

‘China remains a world leader in AI’: I think this phrase needs a little parsing. Friends in computer science tell me that Chinese scholarship and research are often shoddy, plagiarized, or just wrong. Chinese researchers understand the value of PR now, but are probably still well behind. (A recent New Yorker article also goes into depth on China’s faltering economy and social realm.) I’d connect the two and say that a lot of it is probably for show.