One thing I do in this newsletter is share results of my environmental scanning work around AI and the future. I scan a wide range of domains, from technology to the natural world to culture and more. Today I’m posting what I’ve found in the political world as it engages with AI. Specifically, here are some ways politicians and government officials are increasing efforts to regulate or use AI.

We can start with the European Union, which has begun implementing the simply and aptly named AI Act. It has several components that will phase in over the next few years, including a requirement that AI enterprises conduct regulatory compliance assessments. The official webpage includes these aims:

- address risks specifically created by AI applications

- prohibit AI practices that pose unacceptable risks

- determine a list of high-risk applications

- set clear requirements for AI systems for high-risk applications

- define specific obligations for deployers and providers of high-risk AI applications

- require a conformity assessment before a given AI system is put into service or placed on the market

- put enforcement in place after a given AI system is placed into the market

- establish a governance structure at European and national level

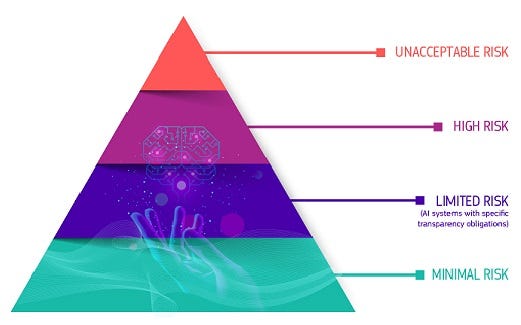

The AI Act also sets up a European Commission AI Office. Additionally, it establishes a system of tiered AI risks: unacceptable, high, limited, and minimal.

However, it is still early days for the law, with a great deal of rule-making ahead. Watch that space.

Across the English Channel, the new Labour government scrapped £1.3 billion in funding that the previous administration had earmarked for “the creation of an exascale supercomputer at Edinburgh University and a further £500m for AI Research Resource.” As far as I can make out, this wasn’t an anti-AI move so much as part of a broader austerity agenda; I’d like to hear from British readers on this.

Elsewhere in the Anglosphere, the Australian government published a list of voluntary guidelines for AI work. These recommendations are for “developers and deployers of AI systems” to:

1. Establish, implement, and publish an accountability process including governance, internal capability and a strategy for regulatory compliance.

2. Establish and implement a risk management process to identify and mitigate risks.

3. Protect AI systems, and implement data governance measures to manage data quality and provenance.

4. Test AI models and systems to evaluate model performance and monitor the system once deployed.

5. Enable human control or intervention in an AI system to achieve meaningful human oversight.

6. Inform end-users regarding AI-enabled decisions, interactions with AI and AI-generated content.

7. Establish processes for people impacted by AI systems to challenge use or outcomes.

8. Be transparent with other organisations across the AI supply chain about data, models and systems to help them effectively address risks.

9. Keep and maintain records to allow third parties to assess compliance with guardrails.

10. Engage your stakeholders and evaluate their needs and circumstances, with a focus on safety, diversity, inclusion and fairness.

Meanwhile, regulatory action is rising in the United States. The National Telecommunications and Information Administration (NTIA), part of the Department of Commerce, issued recommendations for the government to support the open publication of AI model weights.

Various actors are pressuring the federal government to do more. Seven Representatives sent a letter to the Federal Election Commission (FEC) urging it to state its position against political deepfakes clearly. Grok’s image generator comes in for particular attention:

The proliferation of deep-fake AI technology has the potential to severely misinform voters, causing confusion and disseminating dangerous falsehoods. It is critical for our democracy that this be promptly addressed, noting the degree to which Grok-2 has already been used to distribute fake content regarding the 2024 presidential election.

Here’s my attempt to get Grok to show George Washington endorsing Kamala Harris for president:

Another actor seeking to pressure the federal government is a scientist who staged a compelling White House event to call for more regulation:

Rocco Casagrande… a biochemist and former United Nations weapons inspector… entered the White House grounds holding a black box slightly bigger than a Rubik’s Cube. Within it were a dozen test tubes with the ingredients that — if assembled correctly — had the potential to cause the next pandemic. An AI chatbot had given him the deadly recipe.

It was safe, of course, because the ingredients had not been assembled. I’m surprised this event hasn’t received more attention.

Elsewhere in America, at the state level, California’s legislature took a major step as both of its houses approved the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act (SB 1047). From what I can tell (it was seriously amended recently), the law would require developers of very large models (those costing $100 million or more to train) to build in a single shut-off button and to publish reports about their safety protocols. It would also set up a state board, the Board of Frontier Models, to monitor AI. AI firms would have to protect whistleblowers. Further, “California’s attorney general can seek injunctive relief, requesting a company to cease a certain operation it finds dangerous, and can still sue an AI developer if its model does cause a catastrophic event.” There seems to be a threshold of $500 million in damages for that last point.

While it won clear majorities in the legislature, SB 1047 has also drawn opposition, hence the amendments. Nancy Pelosi, perhaps the most powerful American politician, came out against it. Fei-Fei Li opposes it. Interestingly, Elon Musk supported it. California’s governor, Gavin Newsom, has yet to sign it.

What do these scan results tell us?

The intersection of AI and politics continues to heat up. Political deepfakes are starting to appear, if not yet in great numbers. Perhaps rumors of AI political content exceed the reality, as when Donald Trump accused the Harris campaign of using AI to fake rally crowds. Meanwhile, OpenAI announced that it had broken up an Iranian election influence operation in which users generated political content:

The operation used ChatGPT to generate content focused on a number of topics—including commentary on candidates on both sides in the U.S. presidential election – which it then shared via social media accounts and websites.

The reasons we’ve given for states to intervene in AI still hold: fears of harm to people, concerns about economic damage, worries about missing potential disasters, and social pressure. If political figures increase their use of AI, we should expect more government action… and AI firms to engage with it.

An example of the latter is Microsoft partnering with Palantir to provide AI services to the United States military. Defense is one of the most highly regulated areas a government operates in, so we could see this as a high-stakes mutual trust exercise.

We should also expect developments in other domains to influence this building regulatory wave: changes in the technology itself, of course, as well as in economics and culture.

(thanks to Ruben Puentedura for one fine link)

Surface-level, high-intensity PR 'events' and 'claims' drive big capital and techno-optimism, but at this point it's starting to look like the crypto-scam whitepapers, with the difference being a dangerous substratum of self-propagated dev-vectors that actually do deliver services, just not with the utility that the naïve and all too often gullible have bought into, e.g.:

"The AI Scientist scheme doesn't sound legit for 2024. The so-called 'innovative system' uses large language models (LLMs) to mimic the scientific process. According to the media, it can generate research ideas, design and execute experiments, analyze results, and even perform peer reviews of its own papers. The researchers claim that The AI Scientist can produce a complete research paper for approximately $15 in computing costs.

Considering the explosion of AI papers, and of academic papers in general, often with ChatGPT elements, generative AI's impact on science may not be as positive as is often portrayed. Deskilling the academics who do the hard work of research analysis could be problematic. A PhD candidate is expected to perform original, independent research with the help of a supervisor and a co-supervisor. Not all is what it seems.

A lot of claims are being made about how good generative AI is at coding and science, but we need to be very careful. Sakana AI employed a technique called 'model merging', which combines existing AI models to yield a new model, and paired it with an approach inspired by evolution, leading to the creation of hundreds of model generations."

https://www.readfuturist.com/what-is-the-ceiling-for-nvidia-backed-japan-based-sakana-ai-sakana/