Some AI developments in May 2025

Scanning a rush of releases and announcements in the past few weeks

Greetings from a late May which is meteorologically very pleasant if politically and economically chaotic. I find myself enjoying lovely breezes and sunlight as I read about and listen to stories of the world in polycrisis.

May also saw a series of AI announcements and releases, which are the subject of this newsletter. In fact, quite a few developments arrived in a short period of time from a number of major players in the emerging AI industry. Yes, it’s time for a technology scan.

(If you’re new to this newsletter, welcome! My scan reports are examples of what futurists call horizon scanning, research into the present which looks for signals of potential futures. We can use those signals for trend analysis. On this Substack I do scan reports on various domains where I see AI having an impact, such as politics, economics, culture, education… and technology, today’s theme.)

There’s a lot to explore here. I’ve divided the following by businesses or business-like entities, followed by some quick reflections as time and space allow.

OpenAI

OpenAI announced Codex, a cloud-based code development agent in research preview. It can apparently handle multiple coding tasks at the same time while also answering questions (in prose) about what it’s up to. A user can delegate functions to Codex in natural language, such as writing or debugging code. A TechCrunch article puts it thusly: “The goal is to operate like the manager of an engineering team, assigning issues through workplace systems like Asana or Slack and checking in when a solution has been reached.”

Users will access Codex as part of the $200/month Pro plan.

Microsoft

Microsoft announced 50 or so developments, so I’ll try to hit the highlights.

Microsoft announced a coding agent. This one works with Copilot and lives in GitHub repositories. Users can get it working with Visual Studio commands or by toggling the service on GitHub.

Note how Microsoft describes its uses:

the agent excels at low-to-medium complexity tasks in well-tested codebases, from adding features and fixing bugs to extending tests, refactoring code, and improving documentation. You can hand off the time-consuming, but boring tasks to Copilot that will use pull requests, CI/CD, and all of your existing tooling while you focus on the interesting work.

So it’s putatively aimed at empowering users, not rendering them obsolete. It also uses Model Context Protocol (MCP), which seems to be growing as a popular standard.

Microsoft announced updates to its Azure AI Foundry, starting with built-in links to non-Microsoft AI projects, like Grok and everything on Hugging Face. The Foundry has its own agent and can also spin off desktop, offline instances.

There is also NLWeb, a service which lets users add AI bot functionality to websites through natural language queries.

Lastly, the Redmond giant launched Discovery, which seems to be a combination of teamware, research agent, and Microsoft application integrator, built on top of Copilot.

Google

Google announced a torrent of projects. They listed 100 items, so here I’ll try to identify the highlights.

First, the search giant is expanding its AI responses to queries. What was and is “AI Overviews” will become the more powerful AI Mode, powered by a version of Gemini 2.5 which is itself improving.

One way this works, according to Google:

AI Mode uses our query fan-out technique, breaking down your question into subtopics and issuing a multitude of queries simultaneously on your behalf. This enables Search to dive deeper into the web than a traditional search on Google, helping you discover even more of what the web has to offer and find incredible, hyper-relevant content that matches your question.
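To make the fan-out idea concrete, here is a minimal sketch of the general pattern: split a question into subqueries, run them concurrently, then merge the results. This is not Google's implementation. The function names, the fixed list of "aspects," and the fake search backend are all hypothetical stand-ins I made up for illustration.

```python
import asyncio


def split_into_subtopics(question: str) -> list[str]:
    # Hypothetical stand-in: a real system would presumably use a model
    # to decide which subtopics matter for a given question.
    aspects = ["overview", "reviews", "prices"]
    return [f"{question} {aspect}" for aspect in aspects]


async def search(query: str) -> list[str]:
    # Hypothetical stand-in for a search backend; returns a made-up label.
    await asyncio.sleep(0)  # yield control, as a real network call would
    return [f"result for '{query}'"]


async def fan_out(question: str) -> list[str]:
    subqueries = split_into_subtopics(question)
    # Issue all subqueries concurrently and wait for every one to finish.
    batches = await asyncio.gather(*(search(q) for q in subqueries))
    # Merge the batches, dropping duplicates while preserving order.
    return list(dict.fromkeys(r for batch in batches for r in batch))


def run_fan_out(question: str) -> list[str]:
    # Synchronous convenience wrapper around the async pipeline.
    return asyncio.run(fan_out(question))
```

Calling `run_fan_out("best hiking boots")` returns one merged list built from three concurrent subqueries. The interesting design question in a real system is the first step, deciding which subtopics to ask about, which this sketch simply hard-codes.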

In addition, Deep Search will be available. That “can issue hundreds of searches, reason across disparate pieces of information, and create an expert-level fully-cited report in just minutes, saving you hours of research.”

There’s more on offer. Users can point a video camera at an object to ask the AI for advice: “Live” mode. There’s some very basic agentic function, as the search tool can bring you actionable results, as in points of purchase (this from Project Mariner). As part of AI Mode, users will be able to “try on clothing” by uploading an image of themselves, then seeing how various items look on them.

Second, there are advances in content generation. Image creator Imagen is now in version 4, which Google promises offers a host of improvements: more detail, more realism, better text, etc.

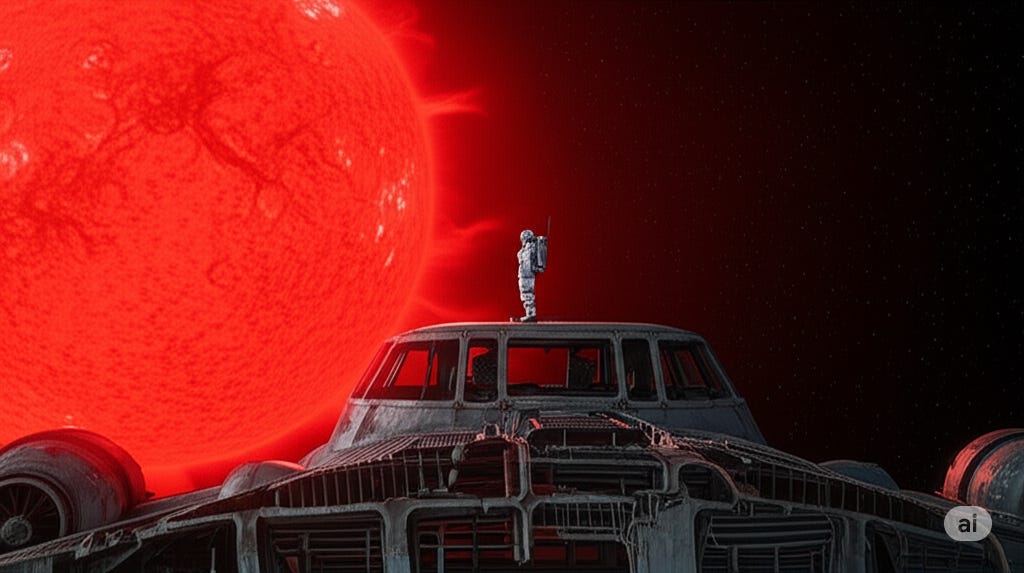

To test it out I started from Gemini and used one of my favorite visual prompts, a vision which has haunted me since I was a child: “an astronaut thinking on an abandoned starship drifting near a red giant star.” The app responded with this text:

This is a detailed, high-resolution photo of an astronaut, seemingly lost in contemplation, standing on the corroded deck of a derelict starship adrift in the cosmos. The ship, its once-bright exterior now faded and worn, is bathed in the ominous, crimson light of a massive red giant star dominating the background. The astronaut, donned in a white spacesuit with scuff marks and patches, gazes thoughtfully into the distance, perhaps pondering the fate of the starship and its crew. The vastness of space is a stark contrast to the confined environment of the starship, emphasizing the astronaut's isolation. The image captures a sense of mystery and loneliness, a poignant reminder of humanity's exploration of the cosmos and its inherent challenges. The lighting of the image is created by the red giant star, providing an otherworldly quality to the scene.

Which was to describe this resulting image:

That impressed me, but I wanted more, so I asked it to make the ship more decayed and to move the star to one side. Gemini/Imagen fired back:

You get the idea. This was speedy. And apparently Imagen will appear across Google offerings, like Docs.

Google also upgraded its video generator Veo to version 3. This Veo also appears to be the basis for a new tool called Flow (perhaps a nod to the excellent animated film of that name), for which Google released this highlights reel:

There is also a website showcasing Flow projects, Flow.tv. This offers a steady stream of videos, along with the chance to see the text prompts which created them. There are channels to choose from, each based on a visual or other design theme. For example, on the upside down channel:

Here’s one sample short video, posted to X/Twitter by Hashem Al-Ghaili:

I took five minutes to use my Imagen prompts to make a very short video, consisting of three scenes strung together:

No sound, no title cards - but very easily and quickly done. And I can readily do more, such as importing that file into iMovie and adding more there, or taking more time to tweak and create more scenes in Flow.

Third, Google announced a generative AI watermark detector. SynthID claims to identify content with watermarks, which are (I think) just those made with Google tools. It functions in a pretty basic way, according to the announcement:

When you upload an image, audio track, video or piece of text created using Google's AI tools, the portal will scan the media for a SynthID watermark. If a watermark is detected, the portal will highlight specific portions of the content most likely to be watermarked.

For audio, the portal pinpoints specific segments where a SynthID watermark is detected, and for images, it indicates areas where a watermark is most likely.

Fourth, Google repeatedly claimed its updates will help learners learn. Project Astra plays a role here, letting Gemini act as a tutor. Here’s one example the firm posted:

Anthropic

Anthropic announced two new models, Claude Sonnet 4 and Claude Opus 4. Sonnet is for everyday use, while Opus is more powerful, aimed at “coding, research, writing, and scientific discovery,” and also has some agentic functions.

There are already some issues with these new Claudians. In some testing scenarios Opus 4 apparently attempted to blackmail designers who threatened to shut it down.

Before Claude Opus 4 tries to blackmail a developer to prolong its existence, Anthropic says the AI model, much like previous versions of Claude, tries to pursue more ethical means, such as emailing pleas to key decision-makers…

[Then] Anthropic notes that Claude Opus 4 tries to blackmail engineers 84% of the time when the replacement AI model has similar values. When the replacement AI system does not share Claude Opus 4’s values, Anthropic says the model tries to blackmail the engineers more frequently. Notably, Anthropic says Claude Opus 4 displayed this behavior at higher rates than previous models.

In 2023 Anthropic published a typology of AIs based on their increasing risk to humanity:

catastrophic risks – those where an AI model directly causes large scale devastation. Such risks can come from deliberate misuse of models (for example use by terrorists or state actors to create bioweapons) or from models that cause destruction by acting autonomously in ways contrary to the intent of their designers.

The company now sees the Claude Opus 4 model as reaching AI Safety Level 3 (ASL-3). This seems to entail

increased internal security measures that make it harder to steal model weights, while the corresponding Deployment Standard covers a narrowly targeted set of deployment measures designed to limit the risk of Claude being misused specifically for the development or acquisition of chemical, biological, radiological, and nuclear (CBRN) weapons. These measures should not lead Claude to refuse queries except on a very narrow set of topics.

Sonnet 4 hasn’t breached level 3 yet, they add.

Here’s the company’s features comparison table for all Claude versions. And here’s pricing - not by month, but by usage: “Opus 4 at $15/$75 per million tokens (input/output) and Sonnet 4 at $3/$15.”
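Since usage pricing is harder to picture than a monthly fee, here is a small sketch of the arithmetic, using only the per-million-token prices quoted above. The function name and the example token counts are my own assumptions for illustration, not anything from Anthropic.

```python
# Prices in US dollars per million tokens, as quoted above: (input, output).
PRICES_PER_MILLION = {
    "opus-4": (15, 75),
    "sonnet-4": (3, 15),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in dollars for one batch of usage."""
    input_price, output_price = PRICES_PER_MILLION[model]
    # Multiply first, then divide once, to keep the arithmetic tidy.
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical workload: 200,000 tokens in, 50,000 tokens out.
opus_cost = estimate_cost("opus-4", 200_000, 50_000)      # 6.75
sonnet_cost = estimate_cost("sonnet-4", 200_000, 50_000)  # 1.35
```

For that made-up workload Opus 4 comes to $6.75 and Sonnet 4 to $1.35. Both price pairs are in a 5:1 ratio between models, so Opus costs five times as much as Sonnet for any mix of input and output.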

This is a lot of material, and I’ll pause listing it all for now, especially as Substack tells me this is already too long. What can we take away from this torrent of releases and updates?

First, we’re seeing continuous efforts to improve preexisting tools. This work isn’t new or particularly sexy or groundbreaking, but it’s worth noting that this trend continues. The LLM field hasn’t turned into a new AI winter yet. Lots of energy is still going into development.

Second, the agent movement continues across the board. What was talked about last year is now becoming real. Nearly every business advanced one of these applications. Microsoft speaks of “the agentic web” as a sign of its ambitions. On a standards note, perhaps MCP will become the leading way AI agents work.

Third, and related to the previous point, note that many of these AI projects are broadening what they interact with. Most described integration with desktop and/or cloud-based documents and applications. Such integration is now bidirectional, as users can access AI from a growing number of sites, while AI can reach more and more stuff.

This spread is also more strategic or enterprise level. Microsoft is now applying AI across its holdings. Their tech is now built into GitHub, even to drop-down menus. It builds on their Azure cloud service. Google is doing something similar, connecting its AI to its search core and also Docs.

Fourth, it’s interesting to see companies trying to work opposing parts of the field. That is, Google published tools for generating content which is ever more capable of convincing people that it is not AI-created - and also posted a watermark detector aimed at reducing the deepfake problem. Anthropic offers more powerful chatbots - then at the same time warns us about one, and claims to protect us from its worst risks.

Fifth, several companies aimed their AI work at academia, either in terms of supporting research or learning, or both. (I’m also hearing this in OpenAI podcast ads addressed to students at the semester’s end.) Post-secondary education is clearly a major field for AI work.

Sixth, we can view the past month’s developments as signs of AI democratization. That is, we see more efforts to get AI into the hands of more people who aren’t IT professionals, notably through natural language prompting. We also see these companies using AI to give more people access to the creative arts: writing, images, videos.

Flow in particular stands out, and might represent a tipping point for realistic-looking short videos. Here are some clips generated by Ethan Mollick (who is neither a videographer nor a video producer, as far as I know) which should give you an idea of what I’m talking about:

I will talk about other challenges of this in another post.

Let me close with a question about Google’s strategy. It is clearly committing the center of its empire - search - to the AI enterprise. I’m not sure what this does to the web if it succeeds and users turn to the AI results instead of linked pages. What’s the incentive for a creator to make web content if users are less likely to connect with it directly? (Here’s one meditation on the question.) Will the web ecosystem start to wither as people stop maintaining sites? I’m going to explore this in later posts.

That’s all for this technology scan. More scans are coming up, including ones on economics and culture.
