Generative AI continues to develop new forms and applications. One of those is AI-generated search, which involves using an LLM-backed service instead of searching the world wide web through Google Search or another search engine. Today I’d like to explore some ways this might play out. The scenario I have in mind is one where we’ll see AI search clobber the web, with LLMs replacing a big chunk of the web ecosystem in much the way TV succeeded radio.

Such a transition is not without precedent. I’ve been tracking possible downturns to the web for decades. While the web exploded across the world from Sir Tim Berners-Lee’s distributed hypertext invention, becoming massively adopted and transforming the world, it has also elicited all kinds of opposition, with people seeking to avoid, outflank, or reduce it. Readers are doubtless familiar with many national governments implementing various strategies to reduce residents’ use of the free and open web, from mandating content filters to manually surveilling content in order to threaten creators. Other entities have imposed similar measures, from schools to businesses, especially for intranets. There have also been other technological platforms aimed at giving users different ways to use the internet other than the web. Apple’s iTunes, for example, and its app store (dutifully copied by Google) neatly avoid web browsers. You can see this as well with podcasts which lack a web presence, or have only a nominal, technical site; they rely on users going around the web to find their materials.

There are also technical and platform structures which use the web, but which encourage or compel users to reduce the technology’s full capacity. For example, some social media platforms, such as Facebook, Instagram, and X/Twitter, punish users for sharing hyperlinks. In education the learning management system/virtual learning environment (LMS/VLE) bravely allows outgoing links (a student or professor can link to a webpage), but breaks inbound ones - i.e., users beyond a given class can’t follow a URL into a class post. There are also business and personal practices which have sought to avoid some of the web’s key functionality. Think of mobile apps and websites which refrain from hyperlinking to content hosted anywhere else on the web, or users who simply don’t post URLs. The reasons for such practices are varied, from fears for state power to wanting to keep users on one’s material to not wanting to share competing views.

There’s more to the anti-web story, but you get the idea. Now AI offers another way around the web, ironically enough built upon it. Let’s look at this in stages: changes to technology, then behavior, then economics, then culture. I’ll offer a grim conclusion, then consider some alternatives.

Technology

OpenAI launched a web search function for ChatGPT last year, first as a separate tool, then embedded within the main chatbot interface. You need to click on the “Tools” menu and pull down “Web search,” then you search away. For example, I entered “Gothic literature” and ChatGPT offered this response:

Note the primacy of content, up front and clearly presented. Links are secondary, visually small. The image carousel which appears first does not lead to sources when you click on it, but only yields bigger images.

Compare with Google. If I enter the same term to basic Google search, here’s what appears at the top of the browser window:

First, a stack of books. Clicking on each either takes you to web pages for them, or opens up embedded windows with more links. Second, a link to Wikipedia. So far so URL-centric.

Ah, but below that Google offers some more responses, which only secondarily lead to web pages:

Those are good questions, actually. Yet in the answers which appear are clearly identified links. For example:

Then below *that* we get more traditional search results:

So far, a pretty web-friendly response, giving us many ways to click away for more resources.

Compare those results with what AI Mode* offers:

On the top left, that most valuable screen location, we get a 600-word introduction to the topic. Which is actually pretty decent. Notice how few links there are, and how visually marginal they are, like how ChatGPT presents them.

Then on the right are some links, but very different ones from what basic search yielded. Instead of Wikipedia or academic sites, we get Study.com and a publisher’s page.

Note that this isn’t a sudden jump from full blown web to dead web. What I’m seeing with AI search is one transformation built on top of a varied history of attitudes towards and technological responses to the web. It’s a slide from emphasizing to de-emphasizing links. We can also observe that this isn’t a technological revolution, but the application of an established tech to another one: LLM to web search. It’s what Brynjolfsson and McAfee described way back in 2014 as recombinant technological practice.

Behavior

How might using this emerging interface change how users engage with the web and the rest of the digital world?

We already know from decades of research that search users tend to grab the first few results and run with them. (Some don’t follow any links. A Bain study described that behavior as zero-click.) Similarly, users of other sites tend to focus on what’s on top of a screen and rarely scroll down, much like readers focused on what was above the fold for print newspapers. Further, I don’t have good research to back this up, but my sense is that readers rarely look into footnotes and even less often turn to endnotes. If we continue along these behavioral lines we should expect many people to zero in on the AI summaries presented by Google’s AI Mode and ChatGPT’s Web search option. They will click on links less often than they do now.

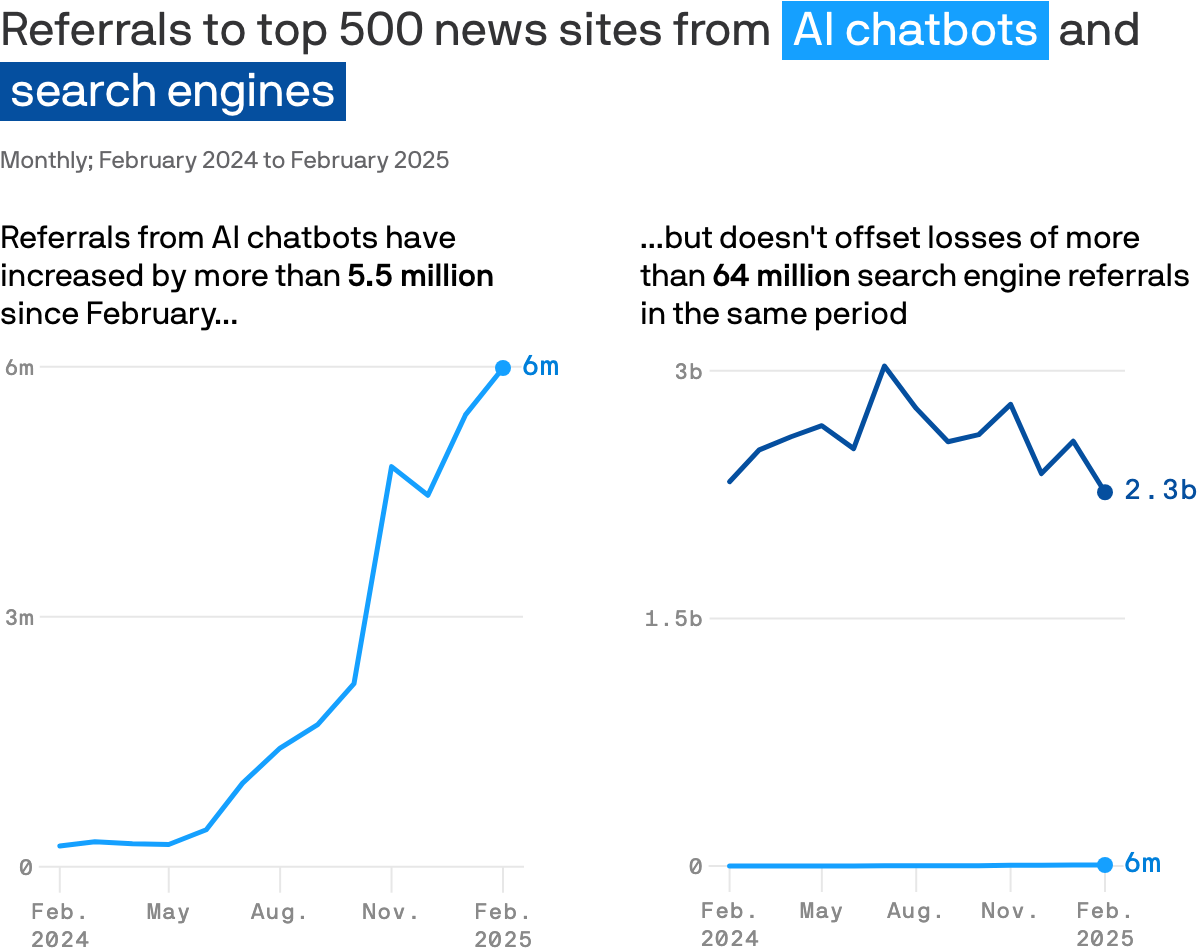

We’re already seeing clear data about this. Back in April one research team found that users responding to Google’s AI summaries (not the full AI Mode) were 34.5% less likely to click through to some other page. Here’s an Axios summary, comparing links from AI to those from search engines:

Pew Research conducted a survey of a small (for them) group (several hundred people) and found much the same. People who used classic web search clicked on links more often than those using AI summaries.

Users who encountered an AI summary clicked on a traditional search result link in 8% of all visits. Those who did not encounter an AI summary clicked on a search result nearly twice as often (15% of visits). [emphasis in original]

Similarweb reports (via The Economist) that human web searching has fallen over the past year:

The same article adds more evidence:

“We had a very positive relationship with Google for a long time…They broke the deal,” says Neil Vogel, head of Dotdash Meredith, which owns titles such as People and Food & Wine. Three years ago its sites got more than 60% of their traffic from Google. Now the figure is in the mid-30s. “They are stealing our content to compete with us,” says Mr Vogel. Google has insisted that its use of others’ content is fair. But since it launched its AI overviews, the share of news-related searches resulting in no onward clicks has risen from 56% to 69%, estimates Similarweb. In other words, seven in ten people get their answer without visiting the page that supplied it.

“The nature of the internet has completely changed,” says Prashanth Chandrasekar, chief executive of Stack Overflow, best known as an online forum for coders. “AI is basically choking off traffic to most content sites,” he says. With fewer visitors, Stack Overflow is seeing fewer questions posted on its message boards.

In other words, the decline seems to be under way now.

I asked ChatGPT about this and the results were… illustrative:

Classic search (Google, Bing, DuckDuckGo) functions primarily as an index: it returns links in response to keywords. The burden of synthesis—clicking, reading, comparing—falls on the user.

AI search (e.g., ChatGPT's web browsing, Perplexity, Google's SGE) offers direct, synthesized answers to natural language queries. This changes:

User behavior: Less clicking, more asking. People are trained to expect instant, authoritative-seeming answers.

Cognitive expectations: Users may skip critical appraisal, assuming the AI has already done that work. [emphases in original]

It also supplied this helpful infographic:

(“Devours” is my word, entered in the original query.)

Economics

How might such a behavior shift, driven by technological change, alter the economics of web publishing?

If people follow links less often we should expect fewer visits to websites. This is bad news for any enterprise depending on web traffic for income. AI search means fewer hits to ads, fewer incoming people who might buy something from a website, fewer new subscriptions and Patreon sign-ups. A reduction in inbound links can vitiate the economic bases of some websites.

People have been sounding the alarm about this recently. For example, here’s from Wired magazine, describing their strategic rethink:

[N]ow Google Search is dwindling as the company reorients users to rely on AI Overviews instead of links to credible publishers. More and more users are also skipping Google altogether, opting to use chatbots like ChatGPT or Claude to find information they once relied on news outlets for.

Elsewhere, Cloudflare’s CEO Matthew Prince told an audience that the number of users hitting non-AI sites is falling sharply:

Ten years ago, Google crawled two pages for every visitor it sent a publisher, per Prince. He said that six months ago:

For Google that ratio was 6:1

For OpenAI, it was 250:1

For Anthropic, it was 6,000:1

Now: For Google, it's 18:1

For OpenAI, it's 1,500:1

For Anthropic, it's 60,000:1.

His summary was stark: “People trust the AI more over the last six months, which means they're not reading original content… The future of the web is going to be more and more like AI, and that means that people are going to be reading the summaries of your content, not the original content."
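To put those ratios in perspective, here is a minimal Python sketch that turns Prince's before-and-after figures into growth multiples. The numbers are only the ones quoted above; the names `CRAWL_RATIOS` and `growth_multiple` are made up for this illustration, and this is rough arithmetic rather than anything Cloudflare published.

```python
# Pages crawled per visitor sent to a publisher, as quoted above:
# (six months ago, now). Names here are illustrative, not from any source.
CRAWL_RATIOS = {
    "Google": (6, 18),
    "OpenAI": (250, 1_500),
    "Anthropic": (6_000, 60_000),
}


def growth_multiple(before: int, after: int) -> float:
    """How many times larger the crawl-to-visitor ratio has become."""
    return after / before


def summarize(ratios: dict) -> dict:
    """Map each company to the growth multiple of its ratio."""
    return {
        company: growth_multiple(before, after)
        for company, (before, after) in ratios.items()
    }


for company, multiple in summarize(CRAWL_RATIOS).items():
    print(f"{company}: {multiple:.0f}x more pages crawled per visitor sent")
```

On these figures, Google's ratio tripled, OpenAI's grew sixfold, and Anthropic's grew tenfold over the six months Prince describes.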

For those who publish web content purely from passion and without economic benefits, a reduction in traffic might depress them psychologically. That means fewer people paying attention to one’s work, fewer folks engaging with it.

It seems likely to me that we’ll see less web content produced. This could take the form of an incremental reduction, like fewer posts to a blog. It might also lead website owners to pull the plug on pages or whole sites which start to look like losses, even dead ends. The number of new web content creators may well start to decline. Overall, the web reaches a peak, then declines.

Culture shift

The decline of the web will encourage further shifts in how we use the digital world in general. We should expect greater use of AI, at least in this form, where people use AI search instead of classic Google plus following links to web pages. AI becomes central to our information use as we turn to it for all kinds of purposes previously served by the web: answering questions, checking data. We would use Gemini etc. to check for medical information, with “Dr. Google” giving way to “Dr. AI.” For shopping AI becomes a new intermediary, a recommender, a filter, serving up a precious few links - and vendors might pay well for those scarce positions.

Creators would shift to platforms beyond the web, where being found through Google Search and SEO doesn’t matter very much. Podcasting, for example, could grow even further as users tend to look for programs through podcatchers. Some platforms could become even larger when people search within them, such as email newsletters or Medium. That Wired announcement I cited earlier culminated in a call for us to sign up to their newsletters and video streams. Virtual, augmented, and extended reality are difficult to discover through Google Search, yet are findable within platforms and interfaces in those spaces, especially locked into hardware, so we might make more stuff for those realms.

Axios refers to this as “Google Zero doomsday” and helpfully lists new business models publishers are trying out. For example, Bustle Digital Group is making quite a shift: “Instead of being a website that publishes stories, we’re now basically an events company.” Axios’ conclusion: “Publishers are playing defense, building their own destinations and weaning themselves off platforms that use their content as training data. The goal is to own the audience connection and no longer be vulnerable to algorithmic and other platform shifts.” The Economist calls for the rapid creation of new business models.

Beyond publishers’ sites and strategies lies social media for everyone. Social media relies to an extent on the web, but mobile devices are a popular way into that world, especially through dedicated apps. We might see social media follow Facebook, Instagram, and X/Twitter in becoming ever more “internal,” less likely to support links elsewhere. Given how widespread social media use is, people get more habituated to not seeing or making hyperlinks. Maybe we’ll spend more time on Wikipedia and Reddit as AI search favors them.

How might our approach to information change? Right now we have access to a vast amount of content, broken out by sometimes competing platforms, shaped by filter bubbles and algorithms. Would AI collapse this into centralized authorities, with Google, ChatGPT, etc. becoming the major nodes of trust? ChatGPT thought so:

Epistemological Rewiring: Trust and Authority

Classic search allows pluralism—users can compare multiple sources and voices. AI, in contrast, often:

Collapses plurality into a singular synthesized voice.

Obscures sources and bias, unless prompted or designed to cite transparently.

Centralizes authority in the model’s architecture and training data.

This alters how knowledge is encountered:

What is “true” becomes what is most confidently stated by the model.

Marginal, dissenting, or nuanced views risk being flattened or excluded.

I suspect that if and when this occurs, we’ll generate competing authority bots. As I’ve said for years, and has started to happen, different entities will want to offer their own takes on the world: nations, religions, companies, etc.

In education, we might see a decline in pages academics produce simply to get good information out in the world. That is, fewer web pages about Euclid, Mary Shelley, Vietnamese poetry, quantum mechanics appear. Existing pages suffer from link rot at an accelerated rate.

I wonder what happens to the LMS/VLE world, which already offers a cut-down version of the web. Will educators and students share links less often, as a result of their experience elsewhere? Will in-LMS AI (for example) soak up some of those formerly web-based habits? Or will students prefer an external AI to what an LMS serves up?

How might this change the world of information literacy? Librarians have taught Web skills for decades and are now teaching how to best use AI. Will the former appear less prominently in info lit curricula and the latter become central? AI might be the core site of digital engagement, then all internet services - including the web - follow.

Alternatives

Futuring work is always about possibilities, in my practice. We offer forecasts based on evidence and models, but as with weather there is plenty of room for reality to offer different outcomes. What I’ve described here is based on evidence but also relies on many contingencies. How else might this “AI search devours the web” turn out?

In conversation, author and government professor Tom Haymes thought of a more optimistic scenario. In his view AI enables and accelerates human creativity, so we would produce more, not less, web content. As we are social beings, we’ll find ways to connect and share. We might use AI search to connect or else work around it.

Perhaps we will turn to web search without AI. DuckDuckGo could rebrand itself as AI-less, or the human search service.

Alternatively, AI search could simply flop. Right now AI search represents a small fraction of digital behavior. (This Washington Post article is very skeptical.) It might not grow. Enough people might decide that LLM content is too unreliable, too politically unsuitable, too culturally insensitive, too commercial, etc., to trust and will head back to classic web search. Google might not figure out how to monetize AI Mode and gradually end it, as that company is fond of doing. There have been other efforts to compete with web search, like the unlamented Knols (speaking of Google killing its projects). When envisioning the future it’s important to think of ways things might not occur.

A variation on this involves a more benevolent Google, which turns up the links its AI Mode serves up. It has an incentive to keep the web healthy, at least for data crawling and for servicing some ads.

A third option comes from an interesting Quartz article, which suggests we might see websites split - or rather, double, setting up AI-pointing duplicates:

some companies are starting to draw a line — literally. They’re building two versions of their websites. One is designed for humans, with visuals, interaction, and branded storytelling. And the other is stripped down, optimized for machine readability, and engineered to feed the AI beast without giving away (so to speak) the crown jewels.

What might that look like?

“For a human, your site should be rich, interactive, delightful,” Tong said. “For a bot? You want clear structure, easy crawlability, but maybe not your full content.” Some publishers are now exposing just a summary or excerpt to crawlers, hoping to bait the index without cannibalizing their monetization model.

The result is a quietly splitting internet: one version designed to delight human users, and another engineered to feed crawlers only specific content with the aim of protecting the value that’s still worth a click.
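To make that split concrete, here is a minimal Python sketch of how a publisher might serve a full page to humans and only a summary to AI crawlers. Everything in it is an assumption for illustration: the `Article` type, the `render` function, and the short list of crawler name fragments are hypothetical, the Quartz piece does not describe any specific implementation, and user-agent strings can be spoofed, so a real deployment would need more than this.

```python
from dataclasses import dataclass

# Illustrative user-agent fragments only; actual crawler names change over
# time and this tuple is an assumption, not an authoritative list.
AI_CRAWLER_TOKENS = ("GPTBot", "ClaudeBot", "CCBot")


@dataclass
class Article:
    title: str
    summary: str  # the short excerpt exposed to crawlers
    body: str     # the full content reserved for human readers


def is_ai_crawler(user_agent: str) -> bool:
    """Case-insensitive check for any known crawler fragment."""
    ua = user_agent.lower()
    return any(token.lower() in ua for token in AI_CRAWLER_TOKENS)


def render(article: Article, user_agent: str) -> str:
    """Return the stripped-down version for crawlers, the full one otherwise."""
    if is_ai_crawler(user_agent):
        return f"{article.title}\n\n{article.summary}"
    return f"{article.title}\n\n{article.body}"


post = Article(
    title="Gothic literature",
    summary="A short overview of the genre.",
    body="The full essay, with images, links, and everything else.",
)
print(render(post, "Mozilla/5.0 (compatible; GPTBot/1.0)"))
print(render(post, "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/128.0"))
```

The design choice here is the crude one the quote hints at: the crawler still gets clear structure and a title to index, but not the full content.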

One more alternative is legal or governmental action. Lawsuits against LLM companies could still cripple those enterprises, as I’ve been warning. Governments could roll out licensing schemes to support content industries, such as requiring AI scrapers to pay into a fund which pays for web content creation.

This post has become longer than expected, as seems to be a habit for me on Substack. Once again the platform warns me this was “too long for email” so I should draw it to a close.

What do you think of my grim scenario? Do you see AI search clobbering the web, or is there a way around that future?

great post! thanks!

Potential long comment warning. There are some hairs to split when talking about "search" as one thing. Searching for specific facts or answers differs from searching when researching, which differs from what I most enjoy, just looking to explore. For the latter, I get more out of results beyond the best, things I would not even consider or just what pops up as curious. GenAI removes serendipity.

Also the concerns seem focused on the impact on what people now rely on from search, to get more clicks on their stuff. You did touch on this in literacy but I see a dramatic decrease in understanding the fundamental basics of what URLs do. I remember when Jon Udell spoke about the literacy of reading and fiddling with parameters in search results. Since modern web tools with visual editors remove us from the HTML soul of the web, I see people not knowing at all that the link they are sharing is encrusted with UTM tracking tags.

Or today, on a web form, I see people submitting 50 mile long URLs generated by Outlook, the stuff that starts with namo2.safelinks.protection.outlook.com. We have lost the wide understanding of how the web works.

And the links most shared on socials as references are often reports or summaries of things written on online news sites that exist to attract clicks and spit ads. I also find links shared to research that go to a university news post. We have lost the Mike Caulfield SIFT sensibility of going upstream to the sources. Each time I go upstream to find the best link, I invariably discover more of interest tangentially.

I relish doing my own analysis of what to read, and in general feel like we are dumbing down society by replacing that mental exercise with the gray goo summarizing of AI. I glance at them, and I might grab and go if it's something as mundane as a simple fact, but I'm going to keep John Henrying my own searching and not taking what the vending machine spits back.

While the bemoaning of the web can be laid at the enshittificating feet of the usual big entities, much also has been lost because we have reached for the convenience of media streams to inform and packaged systems to make our web stuff. Web weaving as a craft is passé. To me searching for just pat answers is a process of diminishing returns over the long run.

But hey, I'm an outlier. I'm outside the statistical mean of GenAI-ville. It's so much more interesting here. Thanks for letting me vent in your post ;-)