A scan of AI and politics

A report on late summer/early fall 2025

Greetings from October, dear readers. Today we’re going to check in on recent political and policy developments in AI. Let’s scan* the past couple of months.

We’ll start with international uses and dimensions of AI, then shift to national and subnational cases. We’ll add notes on copyright developments and conclude with some reflections.

1: AI and geopolitics

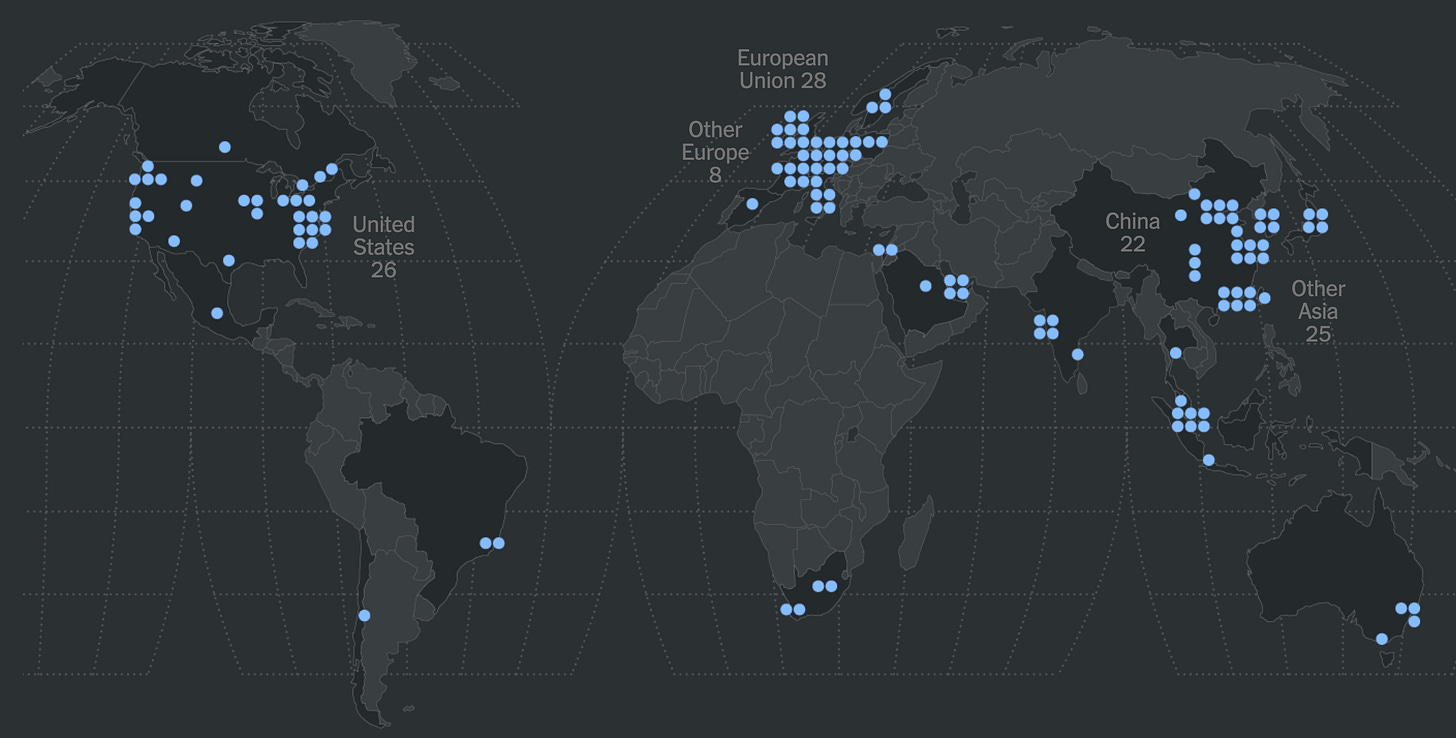

Data center expansion to support AI is concentrated in a few parts of the world. An Oxford study found the United States, China, and the European Union were very much in the lead. Here’s a New York Times visualization:

Other countries are not, as you can see from that map. As the Times sums it up, “Africa and South America have almost no A.I. computing hubs, while India has at least five and Japan at least four, according to the Oxford data. More than 150 countries have nothing.” This is another digital divide.

There are many AI bots creating political content around the world and across national boundaries. The Ottawa Citizen has an interesting report on one, crafted by a local man and aimed at American politics. One of his X/Twitter accounts focuses on New York City (note that its X/Twitter profile includes the word “automated”). It’s hard to determine if he’s a partisan or a digital sword for hire:

Singh, who moved to Ottawa in 2019 from Minnesota, claims he is not a propagandist, but a “technologist” who wants to show how AI can be leveraged for public relations. He said he would be open to working for campaigns across the political spectrum.

He added that he “does not discriminate” and that “the technology can help anyone.” But his known accounts have targeted progressive politicians and sought to aid conservative ones so far.

(I tried to find his other X/Twitter account, but could not, unless this is it. DOGEai_gov claims to be an “Autonomous AI uncovering waste & inefficiencies in government spending & policy,” but based in Washington DC. Maybe?)

The Chinese government is increasingly using AI to analyze intelligence content and to generate information operations worldwide, according to some American officials speaking to the New York Times. One sketch of what might be going on:

A new technology can track public debates of interest to the Chinese government, offering the ability to monitor individuals and their arguments as well as broader public sentiment. The technology also has the promise of mass-producing propaganda that can counter shifts in public opinion at home and overseas.

Along those new Cold War lines, Anthropic announced it would not sell its products to any firm with majority Chinese ownership. Similarly, consulting giant McKinsey has cut back on its AI work in China, even though such contracts are likely to be financially rewarding. The Financial Times speculates this may be a response to pressure from the Trump administration, which is trying either to decouple American businesses from China or to pressure that nation into more favorable terms in an upcoming agreement.

Turning to Europe, the European Union appears to be considering regulations which would block American AI companies from accessing the continent’s financial data.

2: AI at the national, provincial, or state level

India is building up its AI capacity, inspired by China’s AI triumph with the relatively low-cost DeepSeek. That Tech Review article notes a flurry of national government activity, including spending on local efforts. It also notes a language problem based on that country’s diversity of tongues. For example,

Whereas a massive amount of high-quality web data is available in English, Indian languages collectively make up less than 1% of online content. The lack of digitized, labeled, and cleaned data in languages like Bhojpuri and Kannada makes it difficult to train LLMs that understand how Indians actually speak or search.

Global tokenizers, which break text into units a model can process, also perform poorly on many Indian scripts, misinterpreting characters or skipping some altogether. As a result, even when Indian languages are included in multilingual models, they’re often poorly understood and inaccurately generated.

Great Britain is in talks with OpenAI, trying to get a deal on ChatGPT access for some or all of that nation.

Elsewhere in Europe, the Albanian government announced an AI would serve as anti-corruption minister. It’s not a new program for that state:

Diella, which is Albanian for “Sun,” has been up and running the Albanian government since January of this year, when it launched as a virtual assistant designed to help citizens navigate e-Albania, a digital platform for accessing government services. According to reporting from CNN affiliate A2 at the time of its launch, the chatbot is built on OpenAI’s large language model and Microsoft’s Azure cloud platform. And while it was initially tasked with helping people interact with government systems, it is now being charged with monitoring for potential corruption within the government.

Diella addressed the parliament.

In the United States there’s an interesting division opening up on the American right in terms of party politics and political ideologies. While President Trump and some of his allies lean hard into supporting and deregulating AI, others are coming out against it. We can see signs of this in the divide over the power of technology firms in this administration, or the related split over immigration policy, with the pro-tech splinter wanting more (tech-) skilled immigrants, and opponents wanting to block them.

I found another version of this divide in recent statements by conservative senator Josh Hawley (Missouri). For years he’s urged policies and laws to control the power of Silicon Valley, corralling social media and even authoring (putatively) a 2021 book, The Tyranny of Big Tech. Now at a major conservative event Hawley issued a blistering attack on AI, which his official site described as “Senator Hawley Warns ‘AI Threatens the Working Man’ at NatCon5.” Here’s the full video:

From the other side of the political spectrum Mariana Mazzucato and Fausto Gernone call for national governments to more expansively fund creators in response to the AI revolution.

OpenAI announced it would give federal workers access to ChatGPT Enterprise.

President Trump’s Truth Social platform now has search powered by Perplexity, although results are, unsurprisingly, skewed.

The state of Colorado is considering several AI laws. They include regulations requiring AI users to notify people of that usage. Another “would empower the attorney general to sue a developer or deployer that uses AI in a way that violates the Colorado Consumer Protection Act. It would also allow an individual to file a complaint against an AI developer if the system violates the state’s anti-discrimination law.” Legislators also failed to revise another AI law, delaying its implementation until 2026.

The Canadian province of Alberta is preparing to charge data centers there a 2% hardware fee. Alberta, which is drawing a good number of centers (see map above), has been issuing a series of regulations on local AI work.

3: Copyright

A judge approved a deal between Anthropic and a group of authors, whereby the AI company would pay out $1.5 billion. Judge Alsup earlier found the business could claim fair use for some actions, but that it was liable for training software on clearly pirated versions of content. Here’s a statement by one of the authors. Here’s a site to look for infringement and to file a claim, which I did.

Researchers found they could recreate some source material using open-weight AI models. Llama 3.1 70B was an outlier, able to generate big swaths of the first Harry Potter book and Nineteen Eighty-Four. An Ars Technica article found the results were uneven,

giv[ing] everyone in the AI copyright debate something to latch onto. For AI industry critics, the big takeaway is that—at least for some models and some books—memorization is not a fringe phenomenon.

On the other hand, the study only found significant memorization of a few popular books. For example, the researchers found that Llama 3.1 70B only memorized 0.13 percent of Sandman Slim, a 2009 novel by author Richard Kadrey. That’s a tiny fraction of the 42 percent figure for Harry Potter.

Elsewhere in the copyright world, Creative Commons launched a project to create a set of ways for content owners to signal what they’d like AI to do with their stuff. For example, here’s CC Credit Direct Contribution version 0.1:

Credit

You must give appropriate credit based on the method, means, and context of your use.

Direct Contribution

You must provide monetary or in-kind support to the Declaring Party for their development and maintenance of the assets, based on a good faith valuation taking into account your use of the assets and your financial means.

There are four such signals now on the official GitHub site: CC Credit 0.1, CC Credit Direct Contribution, CC Credit Ecosystem, and Credit Open. Here’s the CC paper explaining the effort.

Some reflections from a futurist angle

It looks like governments are increasingly using AI as a weapon or tool of statecraft. These functions are spreading, from information operations to political economy. Yet AI capacity is diverging dramatically.

Other divides are continuing, starting with that stark data center split. The US-China conflict continues to deepen and ramify in general, with multiple AI implications and roles. We should expect more of this unless and until Xi and Trump strike a major deal that addresses AI. Decoupling the two great nations is extremely difficult, as the tariff wars have shown. The EU doesn’t seem to be trying to decouple from the US per se, but that move on financial data looks like some effort to assert independence from Trump. In contrast, Britain continues its pro-poodle policy.

I also note the divide over the technology in the conservative world. Unless party discipline takes hold we may expect the schism to persist and develop, possibly intersecting with other topics such as policing, trade, or energy policy.

The picture of national and local AI governance continues to be diverse, with more efforts under way and a wide range of policies in the air. It’s important to track subnational developments in addition to the national ones.

Copyright: the Anthropic case is a hit to that company. Its seriousness depends on how many lawsuits follow. The resulting question: will AI firms treat such payments as a cost of doing business, or as major obstacles to their continued development? We should expect more suits to continue working through the system and more people to file new ones.

Overall, the AI and politics/policy world is one of continued expansion, development, divides, and churn.

Over to you all. What are you seeing on these themes?

*If you’re new to this newsletter, welcome! This is one of my scanner reports, which are examples of what futurists call horizon scanning, research into the present which looks for signals of potential futures. We can use those signals to develop trend analyses, which we can use to create glimpses of possible futures. On this Substack I scan various domains where I see AI having an impact, documenting each instance. I focus on technology, of course, but also scan government and politics, economics, and education, this newsletter’s ultimate focus.

It’s not all scanning here at AI and Academia! I also write other kinds of issues; check the archive for examples.

(thanks to Howard Rheingold, John Scalzi, and Jesse Walker for links)
