Greetings, all, from the bizarrely freezing Washington, DC area. I hope those of you hit by this global weirding, as well as those in the more traditionally frozen north, are staying safe and warm.

Today I wanted to fire up my scanner and share some notes on how higher education is engaging with AI as 2025 kicks off. I’ll start with a personal observation, then hit up a bunch of stories and developments.

I’ve broken things up into broad categories this time: big developments outside of education; K-12; higher ed.

1 The bifurcation is hardening

Over the past few months I’ve seen a divide over AI in academia deepen. (When I say “I’ve seen,” I mean, among other things, reading a lot of scholarship, journalism, and social media, as well as talking with academics around the world, interacting with my students and with faculty and staff colleagues, and hearing from people at the various things I’ve been doing: presentations, workshops, webinars, the Future Trends Forum, etc.) At one event a professor said “the bifurcation is calcifying,” and there’s a lot to that.

On the one hand, there’s a strong push for AI in colleges and universities from a range of campus constituencies. Some senior administrators have expressed support, as have faculty members. There is still curiosity across the board, as well as a sense of duty: students will need to know AI for the job market.

On the other hand, there’s opposition to AI in higher ed, with a range of views under that heading. Some think we accept the technology too uncritically and recommend critical AI approaches and studies. Others argue that AI has various deleterious effects on the academy, and so want to block it, or at least give faculty the option to not use the stuff. (For more on the substance of these critiques, check out Maha Bali’s post or mine.)

This bifurcation takes various forms, appearing in research, published scholarship, and teaching. It also seems to be taking hold in campus politics and operations. I’ve heard from some academics who, in trying to advance AI use at their institutions, are running into opposition from colleagues. Some folks are asking their campuses to change or set policies that allow for anti-AI practices.

There’s a *lot* to say about this, but for now I wanted to note the rising trend.

Now, to more items:

2 Pushes and projects outside the academy

A global AI knowledge initiative begins. The International Telecommunication Union (ITU) announced an AI skills coalition at Davos:

The AI Skills Coalition spearheaded by AI for Good under the AI for Good Impact Initiative, will serve as the world’s most trusted and up-to-date platform for delivering AI skills, knowledge, and expertise.

A key focus of the initiative is to address the need for AI skills development of IT professionals, particularly in developing and Least Developed Countries and with a special focus on women and girls, to equip them with the tools and knowledge to support their governments, companies and organizations to leverage AI to foster sustainable development and establish solid AI governance frameworks.

It’s early days and there’s very little discussion yet.

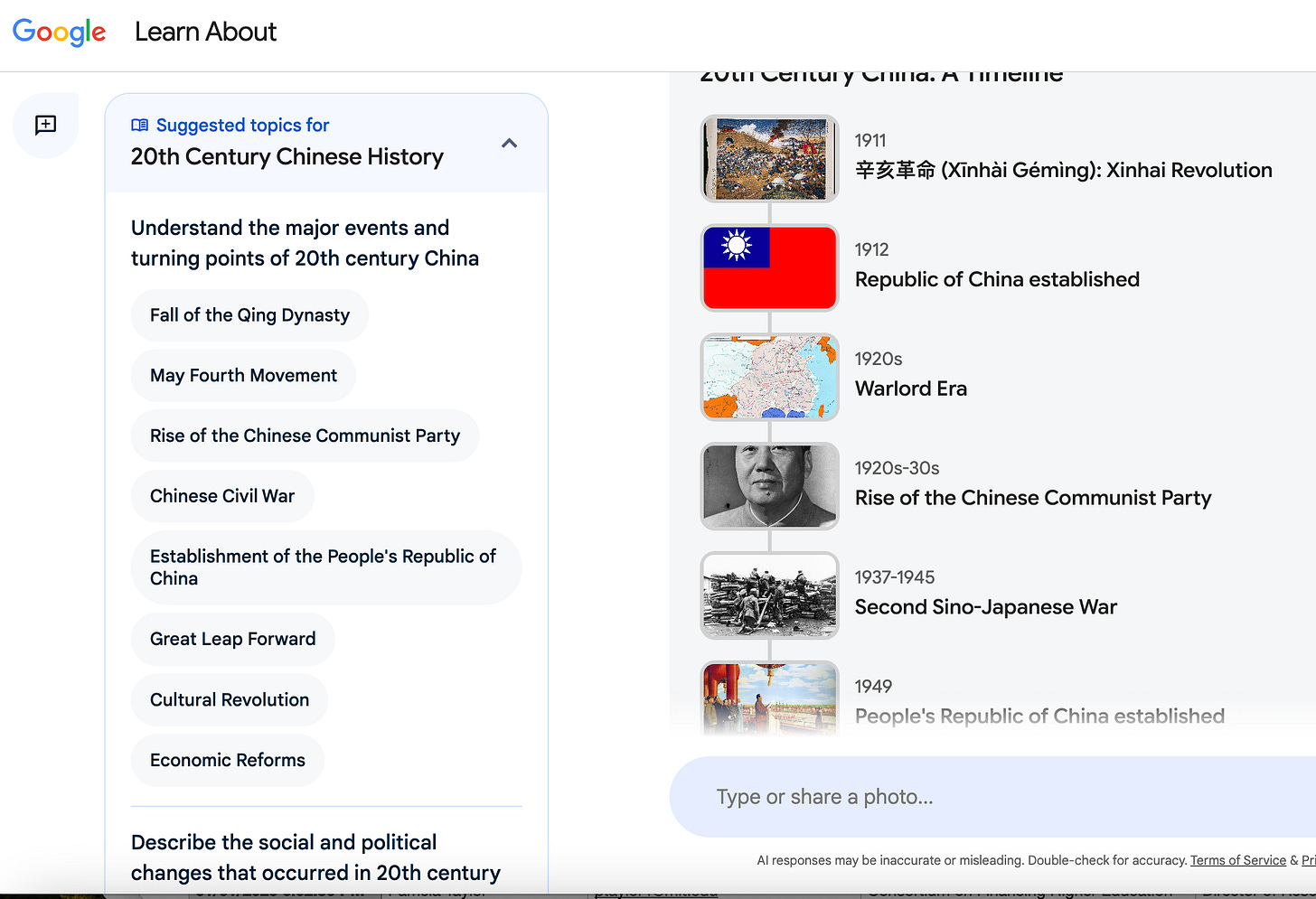

Google launches a conversational teacher bot. Learn About is a conversational bot that encourages questions about topics to study. Learner questions bring up a group of responses, including text summaries, visuals, references, and suggestions for follow-up questions.

3 In K-12

A federal court ruled that a Massachusetts school district was within its rights to punish a student for submitting AI-generated content as his own without approval.

Meanwhile, across the nation, an AI-led school gears up. Arizona’s Unbound Academy, a charter school structured around AI-led instruction, will open soon. I said K-12, but it’s starting with grades 4-8. Here’s Unbound’s charter application form.

Some details:

The curriculum will utilize third party providers—such as online curriculums like IXL or Math Academy, among others—along with their own apps, including the AI tutor, which monitors how students are learning, and how they’re struggling.

Meanwhile, teachers—known as “guides”—will monitor the students’ progress. Mostly, the guides will serve as motivators and emotional support, said MacKenzie Price, a cofounder of Unbound Academy.

A London college that teaches both high school and higher education students announced AI-led classes.

On the American west coast, OpenAI launched an online class teaching K-12 teachers how to use AI. This article shares comments from skeptical teachers.

One AI instance attempted to imitate Anne Frank, but was apparently trained to be too cheerful.

4 Into higher education

A University of California, Los Angeles (UCLA) comparative literature class is using AI:

Comp Lit 2BW will be the first course in the UCLA College Division of Humanities to be built around the Kudu artificial intelligence platform. The textbook: AI-generated. Class assignments: AI-generated. Teaching assistants’ resources: AI-generated.

Here’s some of the proposed class design:

“Because the course is a survey of literature and culture, there’s an arc to what I want students to understand,” said Stahuljak, a professor of comparative literature and of European languages and transcultural studies. “Normally, I would spend lectures contextualizing the material and using visuals to demonstrate the content. But now all of that is in the textbook we generated, and I can actually work with students to read the primary sources and walk them through what it means to analyze and think critically.”

Because Stahuljak can focus on those aspects of teaching during lectures, TAs would in turn be liberated from those tasks and can instead devote more time to helping students with writing assignments — an element of instruction that sometimes receives short shrift in large classes, she said.

In contrast, a University of Tennessee project poisons AI scrapers trying to train on digital music.

The University of Minnesota expelled a PhD student, charging him with using AI to cheat on a preliminary exam. The (former) student fired back, criticizing the detection process, the disciplinary process, and the professors involved.

Bloomberg offers a snapshot of just how bad AI detectors are.

Back on the west coast, a federal judge threw out a researcher’s statement because AI had generated some of it. Hilariously, the expert’s specialty was misinformation.

France’s Sciences Po returned to entrance exams, in part to reduce AI cheating.

A group trying to increase AI use for student success and admissions received nearly $200 million.

On the research side, New York state invested in computing hardware for AI. A research team used generative AI to build knowledge about historical texts.

What can we learn from this scattering of stories and thoughts?

Overall, generative AI is still growing. Companies are pushing it into education and some educators and others seek to pull the tech inside for various uses. Meanwhile, the resistance is firming up.

We don’t have a handle on cheating at all.

(thanks to George Station and others for links)

That _Corpus-wide machine learning analysis of early modern astronomy_ is an amazing example of the reconstructive utility AI is now bringing to scholarly fields.