Yesterday the board of OpenAI, makers of ChatGPT and DALL-E, fired their CEO, Sam Altman.

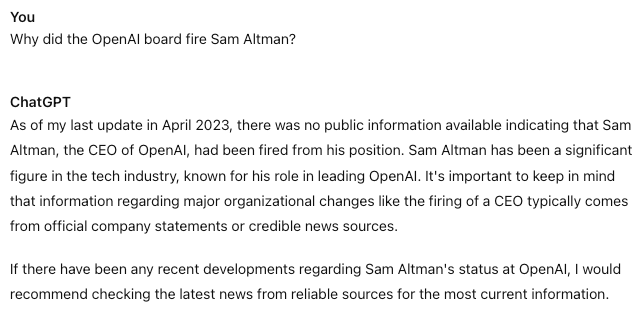

I’d like to summarize the story, based on what little information is out there, then discuss what this might mean for the future of AI in higher education. I’d like to invite readers to share any news they’ve come across or ideas they’ve had. And I will also add comic bits for cheer, like this one:

This firing has come as a shock. Altman had become for many the public face of generative AI. Since the spectacular launch of ChatGPT a year ago he had appeared all over the media, testified before the United States Congress, and met with national and business leaders around the world. Several days ago he presided over the first OpenAI developers’ conference, where the company announced all kinds of impressive releases. So why fire Altman now?

The official announcement was pretty vague. The key passage is this:

Mr. Altman’s departure follows a deliberative review process by the board, which concluded that he was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities. The board no longer has confidence in his ability to continue leading OpenAI.

On which topic did he fail to communicate? The board did not specify or elaborate.

Further, the board apparently did this without telling anyone at Microsoft, which reportedly owns 49% of OpenAI.

Immediate fallout developed yesterday and last night. The OpenAI board appointed chief technology officer Mira Murati to be temporary CEO while they search for a permanent one. In response, president and cofounder Greg Brockman quit. Then three OpenAI staff also quit, apparently in response to the firing:

Jakub Pachocki, the director of research at OpenAI; Aleksander Madry, the head of a team that analyzes AI risks; and Szymon Sidor, a researcher who had been at the firm for seven years, told associates at OpenAI that they had quit, The Information reported.

(That Information article is paywalled.)

So what happened? Speculation, leaks, and statements are swirling, so I’ll try to identify as many as I can here, at least the ones which sound plausible:

-A struggle over Altman’s aggressive leadership style, which some saw as being bad for user safety, and driven by ego. That might be consistent with the argument in a memo from OpenAI’s chief operating officer:

We can say definitively that the board's decision was not made in response to malfeasance or anything related to our financial, business, safety, or security/privacy practices. This was a breakdown in communication between Sam and the board.

Perhaps this spilled over into interpersonal problems.

-A cultural split over for-profit versus non-profit directions. Recall that OpenAI is nominally a non-profit. Last night Kara Swisher shared what she was hearing:

…as I understand it, it was a “misalignment” of the profit versus nonprofit adherents at the company. The developer day was an issue.

Sources tell me that the profit direction of the company under Altman and the speed of development, which could be seen as too risky, and the nonprofit side dedicated to more safety and caution were at odds. One person on the Sam side called it a “coup,” while another said it was the right move.

Bard offered this take: “Altman was reportedly more interested in pursuing commercial opportunities for OpenAI's technology, while the board was more focused on ensuring that the company's technology was used safely and responsibly.”

(Meanwhile, here’s what OpenAI said to me last night:)

(I also like Jack Raines’ silly ChatGPT effort:)

Back to possible reasons:

-Altman was doing some arrangement or side deal on his own, and the board felt he left them out of the loop.

-Altman overstated OpenAI’s financial standing, and the board wanted someone more realistic.

-Perhaps related: Altman overstated ChatGPT and DALL-E’s safety, especially after Microsoft briefly blocked its workers from using the apps on Thursday.

-Sam Altman’s sister, Annie, accused him of sexually abusing her. Perhaps the OpenAI board was running a quiet, internal investigation on this and it concluded, or there was some other development on this score.

-…or it’s something we can’t yet discern from the broader world beyond OpenAI’s worldlet.

Again, take these theories with a grain of salt. Much of the conversation is speculation, mixed with rumors, people spinning their positions, leaks, and competitors jockeying for advantage. It’s also early in the story and many people have reasons for keeping their cards close to their respective vests.

Yet the OpenAI action remains a major story because of the huge importance of ChatGPT and DALL-E. Those are leading players in the generative AI world, and chaos at their top has implications for the whole.

If we take a step back and try to organize views, I can break them into three schools of thought:

1. This is all a personnel matter internal to OpenAI, focused on something Altman did or didn’t do as an individual CEO. There are no implications beyond the handful of people involved and perhaps OpenAI’s reputation.

2. This actually concerns OpenAI and its suite of products. They might be too dangerous and/or unsustainable and/or have another problem.

3. This story really involves generative AI as a whole. Financial, security, and institutional problems face every actor in that field, not just Altman’s former employer.

So what does this mean for academia and AI’s future?

If #1 turns out to be the case, very little. Unless the leadership, ah, transition yields instability in OpenAI and its services, which could cause problems for users and apps which rely on them. (There might also be factions which keep following the story, either fans of technology leaders or critics of techbros.)

The possibility of spillover damage is even more salient if the truth lies in bucket #2. If OpenAI is in financial trouble it might take desperate creative measures which impact services, or face closure. If safety and security issues are out of control (which they would be, if the CEO wasn’t minding them carefully) then we again face service restructuring and, perhaps, a financial blow.

Category #3 fascinates me, and I’ve been tracking it for more than a year. I’m not sure we have a working business model for generative AI yet. OpenAI is selling licenses and charging for data, but it’s not clear that’s actually turning a tangible profit. Google and Microsoft are infusing LLMs into their products, but is that actually encouraging more paying users? In other words, is the OpenAI board panicking about being able to make their applications work in the marketplace? Again, we don’t know enough to answer those questions, but they’re ones we should ask. (Freddie DeBoer posed a related query earlier today.)

For higher education, I think the implications include:

The importance of watching generative AI very carefully. This is probably what many folks are already saying or hearing, but to reiterate: the field is capable of a great deal of flux. An institutional team with multiple perspectives and information flows is a fine idea.

The possibility of a leading AI tool rapidly changing, becoming unreliable, or ceasing to exist.

The unreachability of OpenAI’s leaders - when some of us depend on the services they provide.

Considering other AI firms, notably Google and Microsoft (although the latter uses OpenAI), because they look more stable and less bizarre to deal with.

Taking open source AI more seriously. That cuts out problematic middlemen, albeit with other costs.

The possibility that the generative AI world as a whole is unstable, and we need to consider our engagement with it very carefully.

NEW DEVELOPMENTS AS I EDITED THIS SUBSTACK: According to one report, “The OpenAI board is in discussions with Sam Altman to return to the company as its CEO.” Or, as Ars Technica puts it, “OpenAI board attempts to hit ‘Ctrl-Z’ in talks with Altman to return as CEO.” Perhaps, as one Mastodon user put it, “I think the $10B Microsoft gorilla entered the board room and started throwing some S*#@ around.” Meanwhile, other sources say Altman’s already revving up new projects which don’t involve OpenAI. So perhaps this is more smoke and mirrors, spin and leakage. As of this writing the OpenAI ~~coup~~ leadership overhaul still stands.

Last note: all of this is very opaque, and that’s not a good thing for academics or for humans in general. If we are racing headlong into a new AI revolution, we need much more transparency than this. We can’t have the leading LLM enterprise acting as a black box. We shouldn’t have to play kremlinologists or celebrity gossip fans to understand how generative AI is provisioned. Overall, we shouldn’t continue like this.

I’m more inclined to work with open source tools than ever.

(thanks to all kinds of friends for bouncing ideas and stories around with me; you know who you are)

Thank you!!!

Thank you for this lucid overview of possibilities and implications! Much appreciated sense-making so we don't feel so blown about by the soap opera. And thank you for emphasizing how much we need transparency around AI governance.