What’s happening with AI and higher education? Which signs of the future can we detect in the present?

Answering those questions is a key part of my research, and I like sharing that research in the open. It’s one way of being transparent and contributing to conversations and knowledge, plus I benefit from feedback. I fired up one of these scanner posts in September (shoutout to JNSKJA) and a followup is overdue.

Here’s what I have been tracking over the past few weeks:

Let’s start with technological developments.

AI speech translation for government purposes. A municipal team produced audio recordings of New York City mayor Eric Adams speaking, but translated by AI into languages Adams doesn’t speak. He defended the move as a way of better connecting with constituents. Critics (this being New York City) slammed it as fake, misleading, and an electioneering stunt. It's a useful anecdote for some of the dynamics around AI-driven text-to-speech and audio translation.

Generative AI makes 3D content. An international (Britain, China, Australia) team produced 3D-GPT, an application which turns a user’s text prompt into digital 3D output which appears in (I think) Blender. (Here’s their scholarly paper.)

3D-GPT enables LLMs to function as problem-solving agents, breaking down the 3D modeling task into smaller, manageable components, and determining when, where, and how to accomplish each segment. 3DGPT comprises three key agents: conceptualization agent, 3D modeling agent and task dispatch agent. The first two agents collaborate harmoniously to fulfill the roles of 3D conceptualization and 3D modeling by manipulating the 3D generation functions. Subsequently, the third agent manages the system by taking the initial text input, handling subsequence instructions, and facilitating effective cooperation between the two aforementioned agents.
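To make that division of labor concrete, here is a toy sketch of the three roles the quote describes. It is not the 3D-GPT code: every name in it (`GENERATION_FUNCTIONS`, `dispatch`, `conceptualize`, `build_call`) is hypothetical, and simple keyword matching stands in for the LLM calls the real system would make.

```python
# Hypothetical stand-ins for the "3D generation functions" the agents manipulate.
# Each maps a made-up function name to keywords that suggest it is needed.
GENERATION_FUNCTIONS = {
    "add_terrain": ["terrain", "hill", "mountain"],
    "add_tree": ["tree", "forest"],
    "add_water": ["lake", "river", "water"],
}


def dispatch(prompt):
    """Task dispatch agent: decide which generation functions the prompt needs."""
    text = prompt.lower()
    return [
        name
        for name, keywords in GENERATION_FUNCTIONS.items()
        if any(keyword in text for keyword in keywords)
    ]


def conceptualize(prompt, function_name):
    """Conceptualization agent: expand the prompt into detail for one function.
    A real system would ask an LLM; this just builds a placeholder description."""
    return f"details for {function_name} inferred from: {prompt}"


def build_call(function_name, description):
    """Modeling agent: turn a description into a concrete call (here, a dict)."""
    return {"call": function_name, "description": description}


def run(prompt):
    """Take the initial text, split it into subtasks, and coordinate the agents."""
    return [build_call(name, conceptualize(prompt, name)) for name in dispatch(prompt)]


plan = run("A lake beside a forest")
called = [step["call"] for step in plan]
print(called)
```

The point of the structure is that the dispatcher narrows the work before the other two agents run, so each one handles only a small, well-defined piece of the scene.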

Why does this matter?

First, it shows generative AI moving up the media complexity stack from text and images into the more computationally intensive 3D world. Watch for more of this, especially in video and gaming. Second, it’s another example of open source work. Third, it might be useful for gaming and virtual worlds, especially if virtual and extended reality (VR, XR) projects take off.

A new math AI tool appears. Speaking of open source, another group of researchers launched another AI application, this time devoted to mathematics. LLEMMA trained on Proof-Pile-2 (a HuggingFace product) to become the best math AI tool around, at least according to the authors. A lot of it is open: “LLEMMA models are open access and we open source our training data and code.” It all relies on Llama, the Meta open AI tool.

Several things strike me about LLEMMA, starting with its strong commitment to open source data and AI. It is also a narrowly focused app, not as broad as, say, Bard or ChatGPT, as VentureBeat notes.

Now let’s consider some developments around the technology and how we acculturate it:

About those electrical power needs. Microsoft is looking for a nuclear engineer to mind an atomic plant, which will power their AIs.

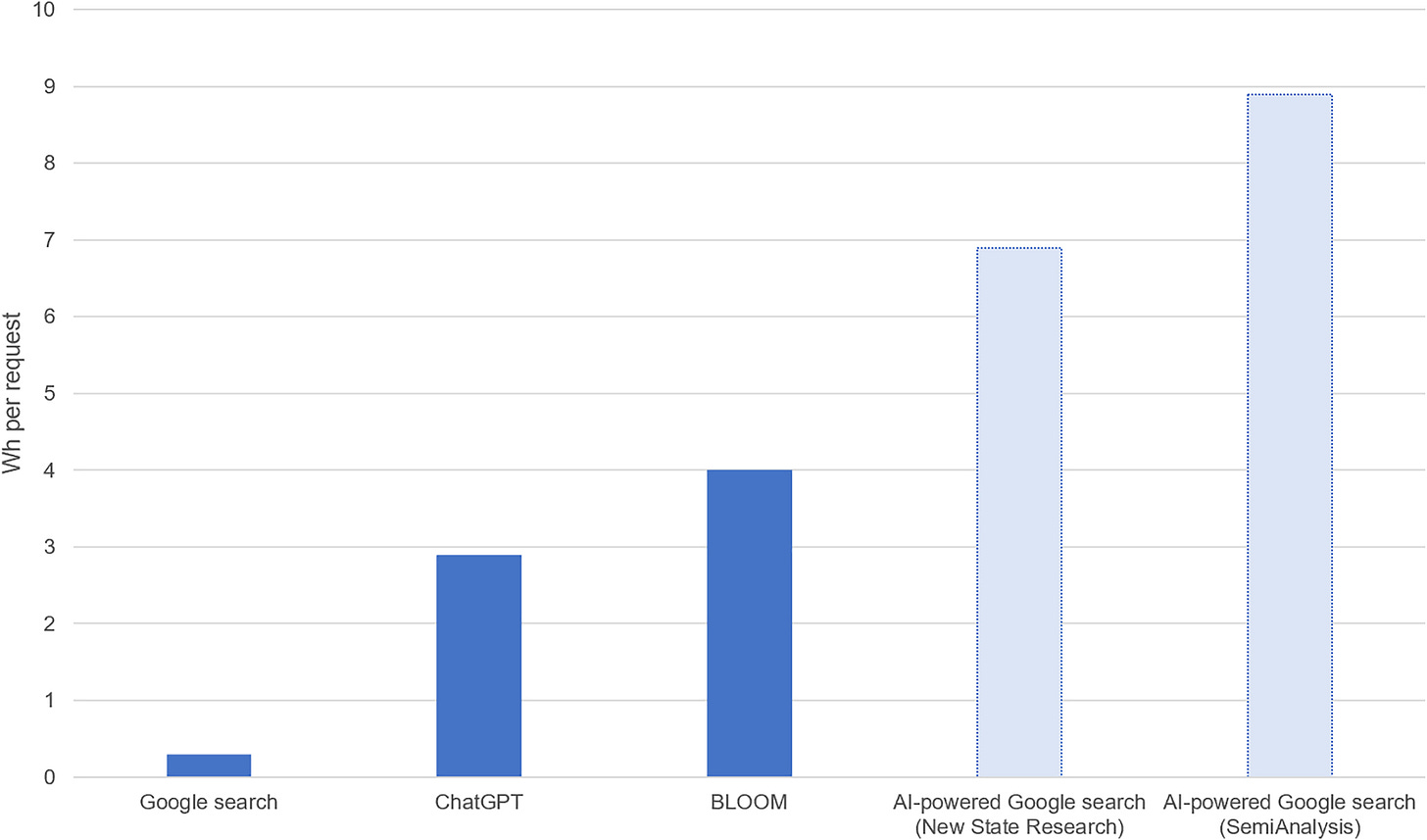

How large are AI power needs now? A new paper by Alex De Vries offers good nuance and caution in trying to understand LLMs’ electricity demand. The author notes that AI applications are very power hungry, going beyond, for example, the power Google search presently requires.

At the same time various forces might slow down massive AI-driven electrical power demand. De Vries points to chip bottlenecks, business case problems with expanding GPU and server count, and more. The subject needs more and better research.

Blocking training. Web authoring service Medium now blocks AI scraping of the content authors publish there, as a default setting. We Medium writers (here’s me) can choose to alter the setting in order to render our stuff scrapeable and trainable, if we prefer it so.
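For readers curious what such a block can look like in practice: one common mechanism is a robots.txt rule naming an AI crawler. The sketch below assumes that approach; the sample rules are illustrative and not copied from Medium, `can_crawl` is a hypothetical helper, and `GPTBot` is the user agent OpenAI publishes for its crawler. It parses the rules locally with Python's standard library, so nothing is fetched from the network.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules only (an assumption, not Medium's actual file):
# refuse one AI crawler everywhere, allow everyone else.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def can_crawl(robots_txt, user_agent, url):
    """Return True if these robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


post_url = "https://example.com/some-post"
ai_crawler_allowed = can_crawl(SAMPLE_ROBOTS_TXT, "GPTBot", post_url)
other_crawler_allowed = can_crawl(SAMPLE_ROBOTS_TXT, "SomeSearchBot", post_url)
print(ai_crawler_allowed, other_crawler_allowed)
```

Note that robots.txt is a request, not an enforcement mechanism: it only works against crawlers that choose to honor it.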

The reasons for Medium’s stance should be familiar by now to anyone following the large volume of AI criticism:

AI companies have nearly universally broken fundamental issues of fairness: they are making money on your writing without asking for your consent, nor are they offering you compensation and credit. There’s a lot more one could ask for, but these “3 Cs” are the minimum…

To give a blunt summary of the status quo: AI companies have leached value from writers in order to spam Internet readers. [emphases in original]

Several thoughts here. First, I wonder how many entities will shift towards this default. Second, will such organizations organize together, at least in forming shared practices and language, or perhaps for something greater? The Medium post calls for such a coalition, speaking of for-profits and non-profits together. Third, will educators urge such a default stance for their campuses and publishing houses? (I asked about this way back in July.)

More lawsuits, one with a religious angle. Universal Music sued Anthropic, alleging the AI service stole song lyrics. Meanwhile, another group of writers has sued AI companies. This time it’s people writing books on religion, and one of the authors is a high profile politician, Mike Huckabee. As with other suits, the charge is that datasets were assembled by scraping copyrighted content:

The lawsuit accuses Meta, Microsoft, Bloomberg and the EleutherAI Institute of using a particular dataset known as Books3, which scraped information from a massive collection of nearly 200,000 pirated books.

I cite this to draw attention, once more, to the copyright threat looming over LLMs. I’m also struck by the politics here, as we see conservatives joining court challenges filed by progressives. It’ll be interesting to see how this bipartisan attack fits into very partisan politics. Perhaps opposition to generative AI is becoming a cross-the-aisle uniter.

AI reproducing racism. A new study found LLMs capable of offering competent medical advice, while at the same time capable of repeating racist medical beliefs. This is due to the preexisting content the software was trained on. It is also a reason for software developers to improve guardrails.

Now, for educational developments:

Endorsing an AI detector. The American Federation of Teachers (AFT) formally endorsed the chatbot detection tool GPTZero. Specifically, the AFT seems to have struck a deal for member teachers (I think): “The teacher's union is paying for access to more tailored AI detection and certification tools and assistance.”

I admit to being puzzled by this story. Yes, I understand the desire for such applications among instructors concerned about students producing intellectually dishonest work. It appears clever for the massive AFT to use its size to achieve an economy of scale. And yet… at a practical level I’m not sure how this plays out. I don’t know how many schools will have technology issues in supporting a third party tool they didn’t purchase. Worse, GPTZero has fared horrendously so far, failing to identify AI-created content and incorrectly deeming human writing to be bot-written enough times to be unreliable. At a conceptual level I worry about the message the AFT deal sends, that plagiarism is what educators are focused on when it comes to AI.

A new AI and medical care degree. The University of Texas-San Antonio announced a new degree which blends health care with AI.

Here’s a top-level sketch of the curriculum:

During the course of the new five-year program, students will obtain a doctor of medicine (MD) as well as a master of science in artificial intelligence (MSAI). In between students’ third and fourth year of medical school, they’ll take a gap year to focus on the AI master’s degree component.

Why this matters: in particular there’s the integration of AI with allied health care, something I’ve touched on previously and will refer to again. There’s the broader question of expanding AI’s role in the postsecondary curriculum, which can take the form of such degrees.

That’s it for now. I hope this research round-up is useful. Please let me know. And if you’d like me to adjust it - focusing on a particular area, writing more, writing less, etc. - the comments box awaits you.