The month of May continues to offer AI news. Today we’ll consider what’s going on in the economics world, scanning and analyzing developments from multiple angles, before June is upon us.

(If you’re new to this newsletter, welcome! My scan reports are examples of what futurists call horizon scanning, research into the present which looks for signals of potential futures. We can use those signals for trend analysis, which we can use to create glimpses of possible futures. On this Substack I do scan reports on various domains where I see AI having an impact, such as politics, culture, education, technology (here’s this week’s earlier post)… and economics, today’s theme. I also write other kinds of issues; check the archive for examples.)

I’ve broken down what follows into categories including actual business uses of AI, business models, investment, partnerships, labor effects, and the impact of geopolitics, followed by some reflections.

Actual uses of AI

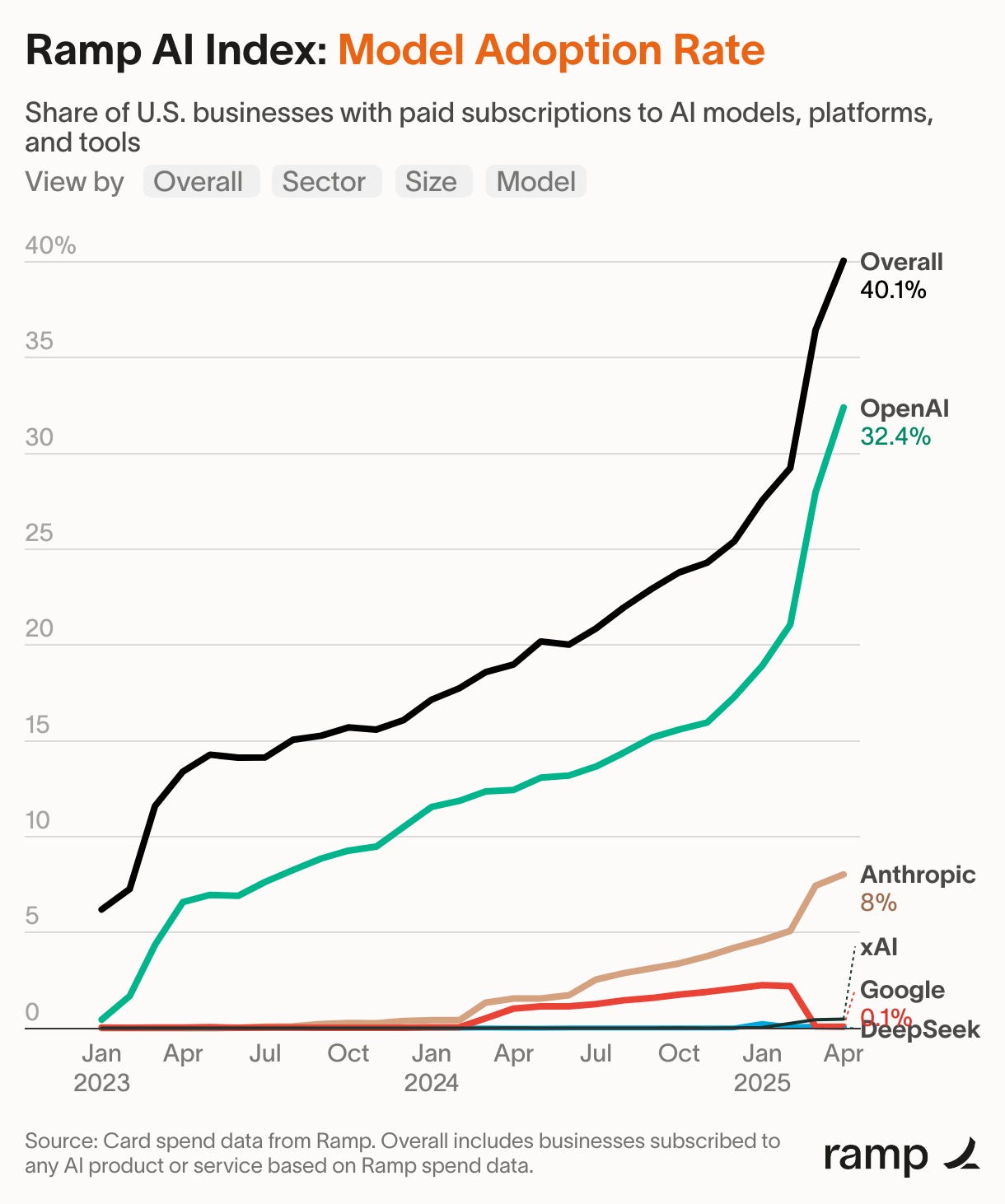

One analytics firm, Ramp, publishes their data about AI adoption. They offer this view of which AI models companies are paying for:

You can easily see OpenAI far in the lead. I’m surprised that Google isn’t higher, and Microsoft doesn’t even appear. Possibly Ramp isn’t counting their preexisting, non-AI subscriptions for Office, Docs, cloud computing, etc., even though those now contain more AI functions.

It is noteworthy that only 40.1% of businesses subscribe to these services.

Ramp also breaks their adoption data down by type of business:

Technology companies being in the lead is unsurprising. Finance is next, which makes sense, given that the business is all about symbolic analysis and manipulation. Following those sectors is a clutch of other businesses which involve more face-to-face and bodily interaction, from health care to manufacturing. They are in an interesting position for AI-enabled robotics down the road.

AI business models evolve

The quest for making generative AI profitable continues. OpenAI decided to remain a sort of nonprofit, rather than attempting to become a for-profit company.

OpenAI also stated it was expanding its abilities to help users buy and sell items. I view this as expanding its marketplace functions, competing with giants like Amazon and Google.

On a different level, Netflix is developing ads with AI-generated content to run in some of its streaming video programs.

Nvidia saw massive, continued growth. They reported taking in $44.1 billion during this year’s first quarter, which represents a solid 12% rise over 2024’s fourth quarter and a staggering 69% increase from that year’s first quarter. That growth seems to be continuing, as, for example, Oracle just signed up to buy “about 400,000 Nvidia GB200 AI chips, worth about $40 billion.” The chips would go into a huge facility in Abilene, Texas, which is part of the Stargate initiative.

Investment in AI continues

Bubble jitters and opposition to AI haven’t slowed down the venture funding firehose. Grammarly announced it raised $1 billion in new funding. TechCrunch reports that nineteen AI startups have each raised more than $100 million in 2025’s first three months, with Anthropic and OpenAI each drawing billions.

Interestingly, some concerns about AI are now the source of business models. One company which targets generative AI coding problems, Endor Labs, won $70 million. A former Y Combinator executive launched the Safe Artificial Intelligence Fund (SAIF), “dedicated to supporting startups developing tools to enhance AI safety, security, and responsible deployment.”

AI money is flowing beyond the United States. The European Commission published its “AI Continent Action Plan” which celebrates spending hundreds of billions of euros on the technology, along with making available huge factories and the ambition to create a large data market. They also commit to a major education initiative:

educate and train the next generation of AI experts based in the EU

incentivise European AI talent to stay and to return to the EU

attract and retain skilled AI talent from non-EU countries, including researchers.

On another continent one AI investment possibility turns on providing clean energy. The nation of Brazil is apparently pitching itself as an AI hosting location because renewables provide most of its electricity.

“Our message to the world, on the basis of our plan, is that AI [power demand] is satiable with usage of renewable energy sources,” [said] Luis Manuel Rebelo Fernandes, Brazil’s deputy minister of science, technology, and innovation.

Beyond money pouring into AI companies, things are getting more complex. Some of the investees are investing in other firms. The most invested-in company, OpenAI, is in turn trying to purchase another company, spending billions to obtain AI coding provider Windsurf. Note that the latter offers agentic AI. Meanwhile Google launched an investment initiative, its AI Futures Fund.

More partnerships appear

At the same time a group of new businesses have appeared which broker relationships between AI companies and intellectual property owners:

Fledgling groups such as Pip Labs, Vermillio, Created by Humans, ProRata, Narrativ and Human Native are building tools and marketplaces where writers, publishers, music studios and moviemakers can be paid for allowing their content to be used for AI training purposes.

Note that this is often paywalled or otherwise inaccessible material:

“The licensing of content that doesn’t exist on the open internet is going to be a big business,” said Dan Neely, chief executive and co-founder of Vermillio, which works with major studios and music labels such as Sony Pictures and Sony Music.

Speaking of which, the New York Times is partnering with Amazon to share the former’s intellectual property with the latter’s AI. Yes, that’s the same New York Times which is suing other AI companies for intellectual property infringement.

On a different partnership level, Apple is apparently hoping third party developers will build content with its (ahem) Apple Intelligence service. “The iPhone maker is working on a software development kit and related frameworks that will let outsiders build AI features based on the large language models that the company uses for Apple Intelligence…” I wrote “apparently” because Apple is notoriously a black box and this was a leak before a big event. We will see.

AI and the world of work: hiring pause or not ready for prime time?

The advance of generative AI into the labor market continued. Microsoft's CEO Nadella stated that “20% to 30% of code inside the company's repositories was ‘written by software’ — meaning AI.” Shopify’s CEO told managers asking for new staff that they would have to prove AI couldn’t do the requisite jobs. On a similar note the CEO of language learning app Duolingo, Luis von Ahn, stated that he wanted the company to be AI-first, which included infusing the technology through its workforce:

We'll gradually stop using contractors to do work that AI can handle;

AI use will be part of what we look for in hiring [and] performance reviews;

Headcount will only be given if a team cannot automate more of their work

Anthropic’s CEO proclaimed the era of manager-nerds was nigh.

In Europe, a leading financial executive insisted on the essential role AI plays in work. This is the head of Statens pensjonsfond, Norway’s Government Pension Fund:

Chief Executive Officer Nicolai Tangen, who recently told lawmakers in Oslo that the technology can help keep the fund’s headcount from growing in the near future, says he has been running around “like a maniac” since 2022 to convince his roughly 670 staff to use AI.

“It can’t be voluntary. It isn’t voluntary to use AI or not,” Tangen said in an interview. “If you don’t use it, you will never be promoted. You won’t get a job.”

Note Bloomberg’s description of the implications: “Tangen sees no future at Norway’s $1.8 trillion sovereign wealth fund for employees who resist using artificial intelligence in their jobs.” In another Bloomberg article appears this resonant phrase: the AI hiring pause is here.

Going even further is Mechanize, a startup attempting to “enable the full automation of the economy.” Its founder, Tamay Besiroglu, wrote on X/Twitter that their goal was to “enable the full automation of all work.”

And yet. Carnegie Mellon researchers simulated a company staffed entirely by AI agents and found terrible results. According to one summary, the professors

instructed artificial intelligence models from Google, OpenAI, Anthropic, and Meta to complete tasks a real employee might carry out in fields such as finance, administration, and software engineering. In one, the AI had to navigate through several files to analyze a coffee shop chain's databases. In another, it was asked to collect feedback on a 36-year-old engineer and write a performance review. Some tasks challenged the models' visual capabilities: One required the models to watch video tours of prospective new office spaces and pick the one with the best health facilities.

The results weren't great: The top-performing model, Anthropic's Claude 3.5 Sonnet, finished a little less than one-quarter of all tasks. The rest, including Google's Gemini 2.0 Flash and the one that powers ChatGPT, completed about 10% of the assignments. There wasn't a single category in which the AI agents accomplished the majority of the tasks, says Graham Neubig, a computer science professor at CMU and one of the study's authors.

The paper concludes thusly:

[C]urrent state-of-the-art agents fail to solve a majority of the tasks, suggesting that there is a big gap for current AI agents to autonomously perform most of the jobs a human worker would do, even in a relatively simplified benchmarking setting. Looking at how different models perform on different types of tasks, we argue that tasks that involve social interaction with other humans, navigating through complex user interfaces designed for professionals, and tasks that are typically performed in private, without a significant open and publicly available resources, are the most challenging.

That is not the end of the story, though.

However, we believe that currently new LLMs are making significant progress: not only are they becoming more and more capable in terms of raw performance, but also more cost-efficient (e.g. Gemini 2.0 Flash). Open-weights models are closing the gap between proprietary frontier models too, and the newer models are getting smaller (e.g. Llama 3.3 70B) but with equivalent performance to previous huge models, also showcasing that efficiency will further improve.

Geopolitics hits AI economics

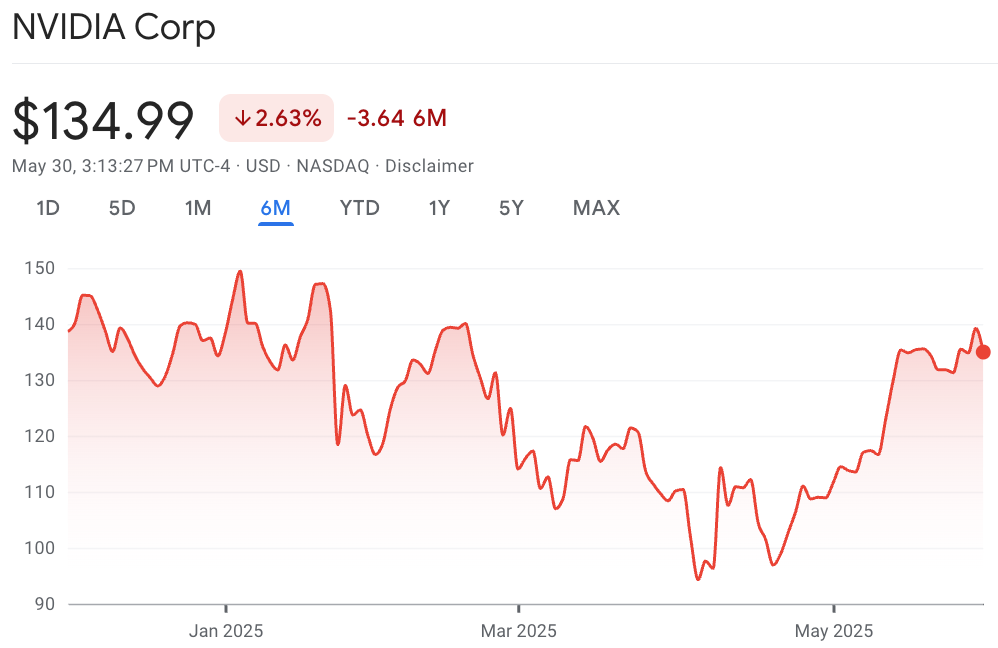

The world of AI’s economics is embedded in the broader world, which means national politics and geopolitics have impacted it. For example, we mentioned NVidia doing great in terms of revenue, but Trump’s tariff attack in April combined with America’s increased hostility to Beijing clobbered the company’s stock value as a result:

A lot went into that decline. As Yahoo! Finance put it in April,

Nvidia stock spiraled after the chipmaker disclosed in a regulatory filing this month that the US government had effectively banned exports of its H20 chips made specifically for the Chinese market to comply with ever-tightening US trade rules. The company said it would record a $5.5 billion loss from the policy change, with JPMorgan analysts estimating the ban would shave up to $16 billion from Nvidia's revenue this year.

Trump’s, ah, TACO-flavored retreat from high tariffs against China seems to have helped Nvidia rebound.

Similarly, the geopolitical world of Trump’s tariff campaign hit the Stargate initiative. Before the Oracle-Nvidia deal, Bloomberg reported that contracts weren’t signed yet:

Preliminary talks with dozens of lenders and alternative asset managers — from Mizuho to JPMorgan to Apollo Global Management to Brookfield Asset Management — kicked off earlier this year. But no deals have ensued, as financiers reassess data centers in the wake of growing economic volatility and cheaper AI services, according to people familiar with the matter, who asked not to be named as the information is not public.

So what do we make of all of these developments?

Overall, we could view this as a snapshot of the AI sector booming, powered by investment and partnerships, increasingly weaving its way into various parts of the economy, from management and finance to work. Ramp’s data is a good example of this rising curve view. Skeptics and opponents might demur, viewing the preceding as a bubble’s final inflation before it bursts.

It’s a slippery environment. Investment is continuing to pour in but profits aren’t clear. OpenAI is going through an ontological crisis but remains the world’s generative AI leader. New business models and partnerships are fermenting. It’s not a mature environment yet. There’s plenty of room for churn.

I’m interested in how geopolitics impacts AI economics. Trump is a study in contradictions, boosting AI in January, following Project 2025’s theme, then throwing a spanner in the works in April with tariffs. The US-China cold war is powering yet dividing AI efforts.

It seems like Stargate is proceeding, despite January’s DeepSeek shock. Presumably the Trump administration is playing some supporting role.

There’s also a nearly dialectical movement going on with business models echoing anti-AI criticism. In my last post I mentioned Anthropic and Google trying to offer services addressing the problems they give rise to. Today I’m struck by new startups attempting to make bank on both AI companies running out of training sets and content holders fearing AI misuse of their material.

Last note: the workforce automation problem remains open. Xu, Song, Li et al. showed agentic workers to be a shambles, but the pressure to make AI labor happen is massive. It’s not clear to me if we’ll see more workers rendered obsolete or most workers maintaining jobs transformed by AI. I’m not seeing much evidence for the emergence of new jobs or job categories, which was the industrial age’s pattern when innovations hit. It’s still hazy, overall. I’ll build up some scenarios if there’s interest.

Let me close by asking: what are you seeing of economics and AI?

Thanks so much for taking the time to do this particular horizon scan. I'm on an AI panel (at a brewery, no less) next week, and this research is helping me think through the AI economy.

Thank you!